From Algorithmic and Reinforcement Learning-Based to LLM-Powered Agents by Prof Bo An

IAS@NTU Discovery Science Seminar Jointly Organised with the Graduate Students' Clubs

On 10 October 2025, the Institute of Advanced Studies (IAS), along with the Graduate Students' Clubs of the College of Computing and Data Science (CCDS) and the School of Mechanical and Aerospace Engineering (MAE), jointly hosted a seminar in the IAS Discovery Science Seminar series featuring Prof Bo An, a leading researcher in artificial intelligence, game theory, and multi-agent decision systems at CCDS, NTU. The session traced how artificial intelligence has evolved from early distributed sensing networks and multi-agent coordination to the modern frontiers of reinforcement learning (RL) and large language model (LLM)-powered agents, showing how theoretical foundations have progressively shaped practical innovations.

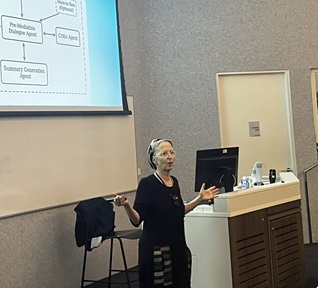

Prof An explains how early defence sensor networks shaped today’s collaborative intelligent systems.

Prof An opened by tracing the historical origins of multi-agent systems, recalling the era when distributed sensors were deployed to track moving vehicles and required multiple agents to collaborate in real time. These early studies, many supported by defence research, introduced fundamental principles of cooperation and communication that remain at the heart of today’s autonomous systems. By highlighting how simple sensing mechanisms inspired the concept of collaborative intelligence, he drew a clear lineage from early rule-based control to adaptive, data-driven learning.

Turning to applied research, Prof An revisited the evolution of security games and pursuer–evader games (PEGs), which were developed to improve strategic decision-making in response to the heightened security challenges that followed the 9/11 terrorist attacks on the World Trade Center in New York in 2001. These frameworks model adversarial interactions in real-world security contexts, such as optimising patrol routes on a map of Singapore to intercept evasive targets. His team’s deep RL-based PEG models, such as NSGZero, extend these principles by enabling scalable, adaptive behaviour in highly complex environments. Such systems, he noted, show how reinforcement learning can turn theoretical game dynamics into deployable decision-support tools for large-scale security and resource-allocation challenges.
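To make the security-game idea concrete, here is a toy sketch of a Stackelberg security game, assuming two hypothetical targets with made-up payoffs and a single patrol resource. The defender commits to coverage probabilities, the attacker observes them and attacks the target with the highest expected payoff, and a brute-force grid search finds the defender's best commitment. This is an illustrative textbook formulation only; it is not how NSGZero or Prof An's team models large networks, and all names and numbers are assumptions.

```python
from itertools import product

# Hypothetical payoffs per target:
# (defender if covered, defender if uncovered, attacker if covered, attacker if uncovered)
PAYOFFS = {
    "A": (1.0, -5.0, -1.0, 5.0),  # high-value target
    "B": (1.0, -2.0, -1.0, 2.0),  # lower-value target
}


def expected_utilities(coverage, payoffs):
    """Per-target (defender EU, attacker EU) for given coverage probabilities."""
    eus = {}
    for target, c in coverage.items():
        d_cov, d_unc, a_cov, a_unc = payoffs[target]
        eus[target] = (c * d_cov + (1 - c) * d_unc, c * a_cov + (1 - c) * a_unc)
    return eus


def attacker_best_response(eus):
    """Attacker maximises its own EU; ties go to the defender (strong Stackelberg assumption)."""
    best_attacker_eu = max(a for _, a in eus.values())
    tied = [t for t, (_, a) in eus.items() if abs(a - best_attacker_eu) < 1e-9]
    return max(tied, key=lambda t: eus[t][0])


def solve_by_grid(payoffs, resources=1.0, steps=100):
    """Search coverage vectors on a grid; return (defender value, coverage, attacked target)."""
    targets = list(payoffs)
    best = None
    for grid in product(range(steps + 1), repeat=len(targets)):
        coverage = {t: g / steps for t, g in zip(targets, grid)}
        if sum(coverage.values()) > resources + 1e-9:
            continue  # infeasible: more coverage than available patrol resources
        eus = expected_utilities(coverage, payoffs)
        attacked = attacker_best_response(eus)
        value = eus[attacked][0]
        if best is None or value > best[0]:
            best = (value, coverage, attacked)
    return best


value, coverage, attacked = solve_by_grid(PAYOFFS)
print(f"defender value={value:.2f}, coverage={coverage}, attacked={attacked}")
```

With these payoffs the search places roughly two thirds of the coverage on target A, close to the point where the attacker is indifferent between targets; the analytical optimum is a coverage of 2/3 on A with a defender value of -1. Grid search is only workable for a handful of targets, which is one reason scalable learning-based solvers matter for large maps.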

Prof An engages the audience, illustrating real-world reinforcement learning applications.

As the talk progressed, attention shifted to industry-driven reinforcement learning, where academic research meets real-world data systems. Prof An discussed applications ranging from fraud detection and ride-hailing optimisation to recommender systems and user-engagement models. In one example, he illustrated how reinforcement learning can optimise user retention by evaluating multiple user trajectories, a concept akin to Reinforcement Learning from Human Feedback (RLHF), to infer which interactions most effectively sustain user interest. He observed that the central challenge lies in the inherently dynamic and unpredictable nature of user behaviour, which demands new adaptive metrics such as AURO to ensure consistent system performance.

Financial technology formed another focal point of the session. In the Trading Master Project, Prof An’s group applied the Mixture-of-Experts (MoE) paradigm, also adopted by DeepSeek, to construct multiple specialised agents, each tuned to a particular market condition. A higher-level “meta-agent” dynamically orchestrates these experts, forming what he described as an agent orchestra capable of responding intelligently to bull, bear, or volatile regimes. Complementary research included Market-GAN and PRUDEX-Compass, platforms that simulate market environments and evaluate strategy performance, achieving up to 89 percent alignment and a 36 percent predictive improvement over existing baselines. Extending these ideas, he discussed FinAgent, an autonomous daily trading assistant, and Synapse, a framework that accelerates learning by reusing high-quality decision paths. These systems, alongside Cradle, which models human–AI interaction in gaming contexts beyond conventional APIs, exemplify Prof An’s philosophy of use-inspired research that balances theoretical depth with practical relevance.
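The meta-agent idea can be illustrated with a toy sketch, assuming three hypothetical rule-based experts (bull, bear, volatile) and hand-crafted regime scores computed from log-returns. A softmax gate turns the scores into weights, and the meta-agent blends the experts' positions accordingly. This is only an illustration of soft gating over experts; the expert rules, the scoring features, and the `temperature` value are all assumptions, not the Trading Master Project's actual design.

```python
import math
from typing import Callable, Dict, List, Tuple

# A hypothetical expert maps a window of recent prices to a target position in [-1, 1].
Expert = Callable[[List[float]], float]


def bull_expert(prices: List[float]) -> float:
    return 1.0  # fully long in an uptrend


def bear_expert(prices: List[float]) -> float:
    return -1.0  # fully short in a downtrend


def volatile_expert(prices: List[float]) -> float:
    return 0.0  # stay flat when the market is choppy


def regime_scores(prices: List[float]) -> Dict[str, float]:
    """Toy regime features from log-returns: mean return as trend, std as volatility."""
    returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    trend = sum(returns) / len(returns)
    vol = math.sqrt(sum((r - trend) ** 2 for r in returns) / len(returns))
    return {"bull": trend, "bear": -trend, "volatile": vol}


def softmax(scores: Dict[str, float], temperature: float) -> Dict[str, float]:
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {k: math.exp((v - top) / temperature) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def meta_agent(prices: List[float], experts: Dict[str, Expert],
               temperature: float = 0.01) -> Tuple[float, Dict[str, float]]:
    """Gate the experts by regime score and blend their positions."""
    weights = softmax(regime_scores(prices), temperature)
    position = sum(weights[name] * expert(prices) for name, expert in experts.items())
    return position, weights


EXPERTS = {"bull": bull_expert, "bear": bear_expert, "volatile": volatile_expert}
position, weights = meta_agent([100, 101, 102, 103, 104], EXPERTS)
print(f"position={position:.2f}, weights={weights}")
```

On a steadily rising series the bull expert receives the largest weight and the blended position is long; on a falling series the bear expert dominates. A learned gate would replace the hand-crafted scores in a real system.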

A packed lecture theatre listens as Prof An presents breakthroughs in multi-agent research.

From a methodological standpoint, the session highlighted new algorithmic directions, notably Group Relative Policy Optimisation (GRPO), an advancement over PPO that stabilises training by comparing multiple sampled trajectories against their group average. Prof An also discussed the Agent Orchestra benchmarking initiative, which evaluates collaborative performance across heterogeneous agents, underscoring how structured benchmarking is essential for sustained progress in multi-agent research.
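For readers unfamiliar with GRPO as it is usually formulated (Group Relative Policy Optimization, introduced with DeepSeekMath), the sketch below shows its core step: several trajectories are sampled for the same prompt or state, and each one's reward is normalised against the group's mean and standard deviation, which replaces the learned value baseline used in PPO. The rewards are hypothetical, the function names are illustrative, and the KL penalty toward a reference policy that full implementations add is omitted.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Score each sampled trajectory against its group: (r - mean) / (std + eps).

    The group mean acts as the baseline, so no separate learned value model is needed.
    pstdev (population std) is a choice here; implementations differ on this detail.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_surrogate(ratio: float, advantage: float, clip_eps: float = 0.2) -> float:
    """PPO-style clipped objective for one step, which GRPO keeps."""
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped_ratio * advantage)


# Four hypothetical trajectories for the same prompt, scored 1 (good) or 0 (bad).
rewards = [1.0, 0.0, 1.0, 0.0]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])  # → [1.0, -1.0, 1.0, -1.0]
```

Because advantages are centred within each group, better-than-average trajectories are reinforced and worse ones are discouraged, while the clipping limits how far a single update can move the policy.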

The talk culminated in a forward-looking discussion of LLM-powered agents, which combine the reasoning capabilities of large language models with the adaptability of reinforcement learning. Prof An described these systems as a natural progression of AI, moving from handcrafted algorithms to self-learning agents and now towards architectures capable of abstract reasoning and self-correction. Such agents, he explained, are already transforming fields ranging from financial analytics and robotics to digital governance and scientific discovery. They represent the convergence of scalability, generalisability, and interpretability, the three pillars that will define the future of intelligent systems.

The Q&A following the talk explores LLM-powered agents, AI scalability, and iterative improvement.

Reflecting on the broader arc of AI research, Prof An reminded the audience that the path forward remains one of continuous refinement rather than perfection. “Every model is wrong; there’s no perfect model,” he remarked, emphasising that progress in AI depends on iterative improvement and critical interpretation. The session concluded with an engaging dialogue between Prof An and the attendees, who discussed the shifting boundaries between academic theory, industrial deployment, and the coming era of agentic intelligence.

By connecting foundational mathematics, practical system design, and philosophical reflection, Prof An’s talk captured the essence of contemporary AI research: an endeavour defined not only by algorithms and architectures, but also by the collaboration between humans, machines, and the dynamic environments they seek to understand.

Written by: Goh Si Qi | NTU School of Computing and Data Science Graduate Students' Club

“I think the future of LLM-powered agents will be the introduction of multi-step reasoning at every stage of the pipeline” - Gilbert Lesmana (PhD student, IGP)

“Professor An’s view of multi-agent coordination as an evolving equilibrium driven by iterative feedback, rather than static convergence, captures the essence of scalable intelligence in complex environments.” - (PhD student, CCDS)

“The concept of reinforcement learning feels deeply relevant to autonomous materials design, as our models must also learn from failure and adapt to changing experimental conditions.” - (PhD student, MSE)