Half-Body Portrait Relighting with Overcomplete Lighting Representation

- A deep-learning photo-editing method for retrospectively modifying the apparent lighting conditions in a half-body portrait photo

While professional photographers may take the time to set up portrait shots meticulously, most amateurs (i.e., anyone with a smartphone) have neither the skill nor the patience to do so. This method helps by allowing portrait photos taken under poor lighting to be digitally relit with more desirable lighting conditions.

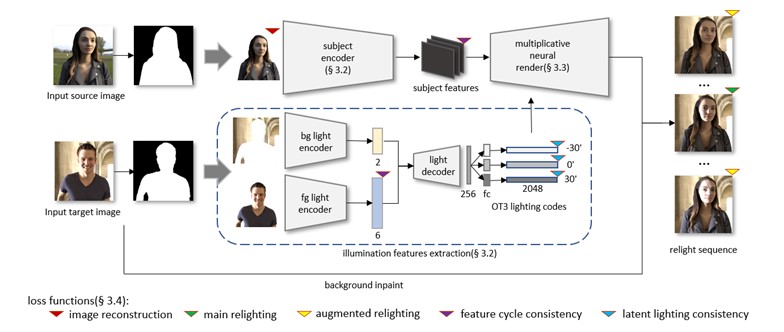

We employ an indirect form of inverse rendering using deep learning. Three key innovations differentiate our method from existing methods:

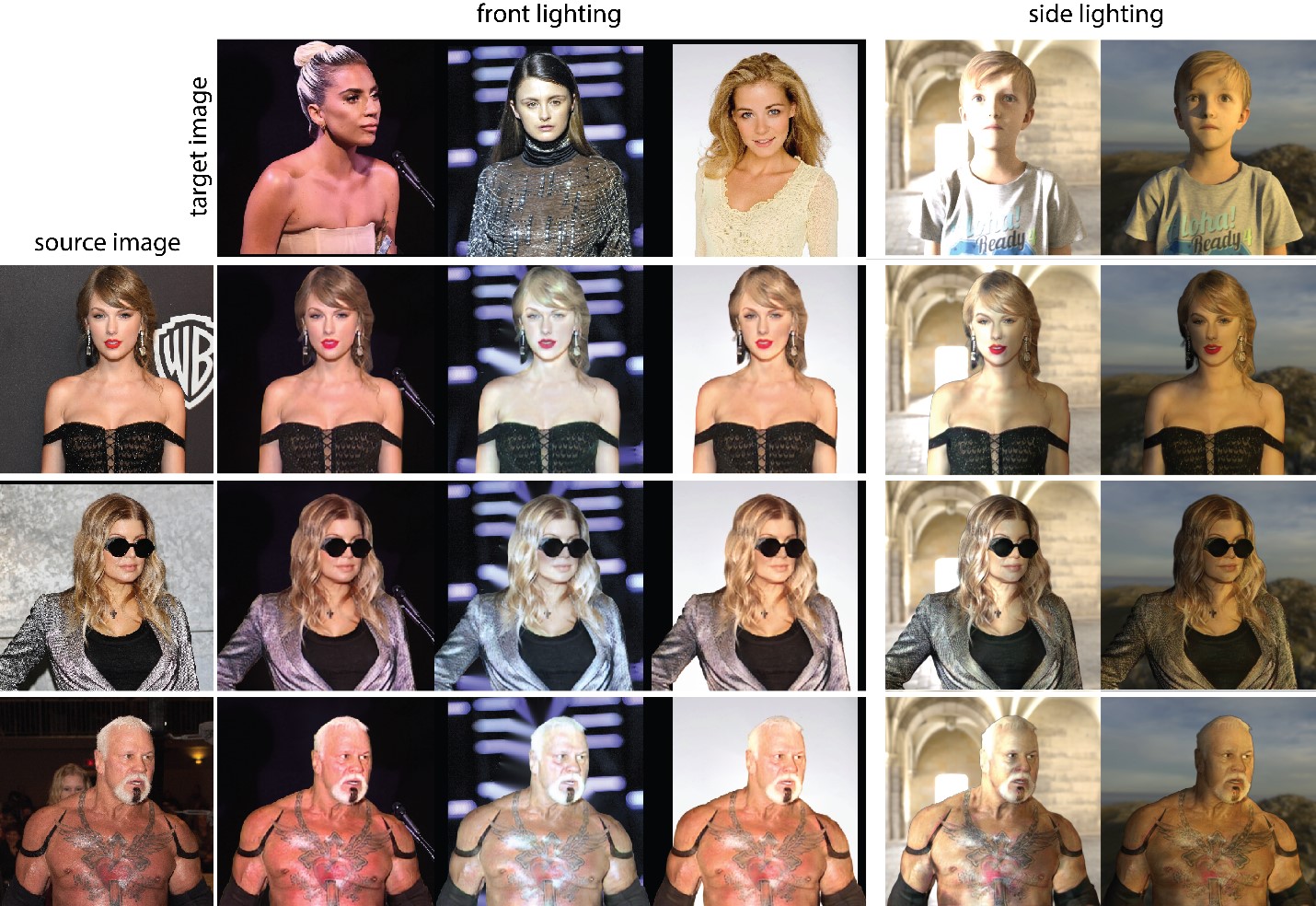

- We introduce a novel overcomplete lighting representation in neural latent space, denoted OT3, which facilitates lighting interpolation in latent space and regularizes the self-organization of the lighting latent space. Other latent representations can at best be used for relighting with a single lighting condition. In contrast, our OT3 representation allows us to interpolate and extrapolate easily, generating a sequence of relit images over multiple lighting rotations from only a single target lighting reference image.

- We propose a novel multiplicative neural render for combining subject and lighting latent codes. It uses multiplication and addition over four neural layers to combine the two codes for relighting. This leads to significantly improved results compared with the conventional approach of combining latent codes by concatenation.

- For encoding the lighting in the target reference image, we use separate foreground and background lighting encoders. In a portrait image, the appearance of the subject is mainly modulated by the foreground lighting, i.e., the illumination behind the camera, but this lighting is hard to estimate. The background plays a secondary role, but may provide correlated cues that help determine the foreground lighting. The two encoders have different channel sizes to reflect their different significance. This leads to improved results compared with encoding the entire image, which is the typical approach.
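
The first two ideas above can be illustrated with a minimal NumPy sketch. It is not the published architecture: the code sizes, the random weights, the ReLU, the plain linear blend standing in for OT3 interpolation, and the function names `lerp_lighting`, `concat_combine` and `multiplicative_combine` are all assumptions made for illustration. Only the use of multiplication and addition over four layers, and the contrast with concatenation, come from the description above.

```python
import numpy as np


def lerp_lighting(code_a, code_b, t):
    """Blend two lighting codes linearly; t outside [0, 1] extrapolates."""
    return (1.0 - t) * code_a + t * code_b


def concat_combine(subject, lighting):
    """Baseline: stack the two codes and leave the mixing to later layers."""
    return np.concatenate([subject, lighting])


def multiplicative_combine(subject, lighting, layers):
    """Modulate the subject code with the lighting code, layer by layer.

    Each layer holds two hypothetical weight matrices that map the lighting
    code to a per-channel scale (multiplication) and shift (addition).
    """
    h = subject
    for w_scale, w_shift in layers:
        scale = w_scale @ lighting
        shift = w_shift @ lighting
        h = np.maximum(h * scale + shift, 0.0)  # ReLU after modulation
    return h


rng = np.random.default_rng(0)
subject_code = rng.normal(size=8)   # stand-in for a subject encoder output
light_source = rng.normal(size=4)   # stand-in lighting code of the input photo
light_target = rng.normal(size=4)   # stand-in lighting code of the reference

# Four layers, matching the count in the text; the weights are random here.
layers = [(rng.normal(size=(8, 4)), rng.normal(size=(8, 4))) for _ in range(4)]

# A sequence of lighting codes; t = 1.5 extrapolates past the target.
steps = [0.0, 0.5, 1.0, 1.5]
features = [
    multiplicative_combine(
        subject_code, lerp_lighting(light_source, light_target, t), layers
    )
    for t in steps
]
```

In this sketch the lighting code rescales and offsets every subject channel at each layer, whereas `concat_combine` only places the two codes side by side. Each entry of `features` would be passed to a decoder to produce one relit frame.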

A mainstream approach to relighting is explicit inverse rendering, which estimates normals, albedo and lighting directly, but this is an ill-conditioned problem. Highly constrained models are often used, but these may not be accurate, even with deep learning approaches. A number of existing approaches also perform implicit inverse rendering. The technical differences between these approaches are referenced and explained in more detail in our journal paper.
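
One way to see why the explicit problem is ill-conditioned is a simplified Lambertian image formation model. The model is an illustrative assumption, not taken from the paper, and the symbols $I$ (observed intensity at pixel $p$), $\rho$ (albedo), $\mathbf{n}$ (surface normal) and $L$ (incoming lighting over directions $\omega$) are introduced here only for this sketch:

$$
I(p) = \rho(p) \int_{\Omega} L(\omega)\, \max\bigl(0,\ \mathbf{n}(p) \cdot \omega\bigr)\, d\omega
$$

For any $k > 0$, replacing $\rho$ with $k\rho$ and $L$ with $L/k$ leaves $I$ unchanged, so a single image cannot separate albedo from lighting without additional constraints.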

Retroactive filtering of poorly captured images is in high demand, e.g., for selfie beautification. Post-processed portrait relighting is expected to be of widespread interest among ordinary users, especially since it will provide capabilities not yet available. It may, for example, take the form of a smartphone app. In vertical markets, it is also highly applicable to film editing, telepresence and augmented reality in less restrictive scenarios, where lighting cannot be easily controlled or pre-planned. In such applications, a standard task is to composite or embed a person or object from one scene into another scene with different lighting conditions. Good relighting capability is crucial to maintaining the realism of the composite.

Source of images: CelebA dataset (https://mmlab.ie.cuhk.edu.hk/projects/CelebA.html)

Song, G., Cham, T.-J., Cai, J. and Zheng, J. (2021), Half-body portrait relighting with overcomplete lighting representation. Computer Graphics Forum, 40: 371-381.