Domain Adaptive Video Segmentation via Temporal Consistency Regularization

- The first work that tackles the challenge of unsupervised domain adaptation in video semantic segmentation

Video semantic segmentation is an essential task for analyzing and understanding videos. Recent efforts largely focus on supervised video segmentation that learns from fully annotated data, but the learned models often suffer a clear performance drop when applied to videos from a different domain.

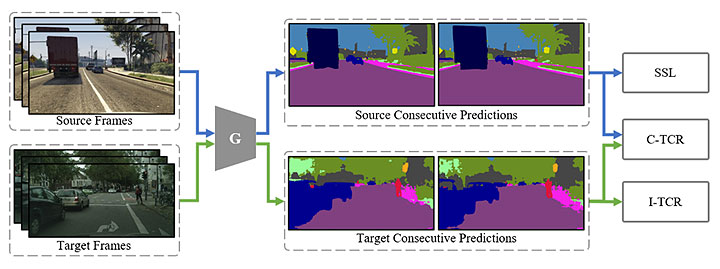

The novelties of our method can be summarized in three major aspects. First, it is a new framework that introduces temporal consistency regularization (TCR) to address domain shifts in domain adaptive video segmentation. Second, it designs cross-domain TCR and intra-domain TCR, which improve domain adaptive video segmentation by minimizing the discrepancy in temporal consistency across domains and across video frames within the target domain, respectively. Third, extensive experiments over two challenging benchmarks show that our method achieves superior domain adaptive video segmentation compared with multiple baselines.
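To make "discrepancy in temporal consistency" concrete, here is a minimal NumPy sketch under loudly stated assumptions. It assumes the previous frame's prediction has already been warped into the current frame (for example with optical flow), and it measures per-pixel consistency as one minus the total variation distance between the two class distributions. The two gap functions are simplified stand-ins chosen for illustration, not the authors' formulation: `cross_domain_gap` compares mean consistency of source and target frames, and `intra_domain_gap` compares confident and unconfident target pixels using a hypothetical confidence `threshold`. All function names here are hypothetical.

```python
import numpy as np

def softmax(x):
    """Softmax over the class axis (axis 0) of a (C, H, W) logit map."""
    e = np.exp(x - x.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

def temporal_consistency(prev_prob, curr_prob):
    """Per-pixel consistency of two consecutive-frame predictions.

    prev_prob, curr_prob: (C, H, W) softmax maps. prev_prob is ASSUMED to be
    already warped into the current frame, so pixel (h, w) refers to the
    same scene point in both maps. Returns an (H, W) map in [0, 1], where 1
    means identical class distributions.
    """
    # Total variation distance between the per-pixel class distributions.
    tv = 0.5 * np.abs(prev_prob - curr_prob).sum(axis=0)
    return 1.0 - tv

def cross_domain_gap(src_tc, tgt_tc):
    """Hypothetical stand-in for cross-domain TCR: gap between the mean
    temporal consistency of source and target predictions."""
    return abs(float(src_tc.mean()) - float(tgt_tc.mean()))

def intra_domain_gap(tgt_tc, tgt_prob, threshold=0.9):
    """Hypothetical stand-in for intra-domain TCR: gap between the mean
    consistency of confident and unconfident target pixels. During training
    the confident side would act as a fixed target for the unconfident side."""
    confident = tgt_prob.max(axis=0) >= threshold
    if confident.all() or not confident.any():
        return 0.0  # only one group present, nothing to compare
    return abs(float(tgt_tc[confident].mean()) - float(tgt_tc[~confident].mean()))

# Toy usage with random 3-class predictions on a 4x4 frame.
rng = np.random.default_rng(0)
src_prev = softmax(rng.normal(size=(3, 4, 4)))
src_tc = temporal_consistency(src_prev, src_prev)  # identical frames: all ones
tgt_prev = softmax(rng.normal(size=(3, 4, 4)))
tgt_curr = softmax(rng.normal(size=(3, 4, 4)))
tgt_tc = temporal_consistency(tgt_prev, tgt_curr)
print(cross_domain_gap(src_tc, tgt_tc))
print(intra_domain_gap(tgt_tc, tgt_curr, threshold=0.6))
```

A real implementation would compute these terms in an autodiff framework so that they can be minimized with respect to the segmentation model's parameters; NumPy is used here only to keep the arithmetic visible.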

Previous works on video semantic segmentation require large amounts of densely annotated training videos, whose annotation is prohibitively expensive and time-consuming.

Our method consists of a video semantic segmentation model G that generates segmentation predictions, a source-domain supervised learning module (SSL) that learns from labeled source-domain data, a cross-domain TCR component (C-TCR) that guides target predictions to have similar temporal consistency as source predictions, and an intra-domain TCR component (I-TCR) that guides unconfident target predictions to have similar temporal consistency as confident target predictions.
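One way to picture how these components fit together is a single training objective that adds the two TCR terms to the supervised source loss. The sketch below assumes SSL is a standard pixel-wise cross-entropy on labeled source frames and that the C-TCR and I-TCR values have already been computed as scalars; the names `source_ce` and `total_objective` and the weights `lambda_c` and `lambda_i` are hypothetical and are not taken from the paper.

```python
import numpy as np

def source_ce(prob, label, eps=1e-8):
    """SSL stand-in: mean pixel-wise cross-entropy on a labeled source frame.

    prob: (C, H, W) softmax map produced by G; label: (H, W) integer class ids.
    """
    h, w = label.shape
    # Probability that G assigns to the ground-truth class at every pixel.
    picked = prob[label, np.arange(h)[:, None], np.arange(w)[None, :]]
    return float(-np.log(picked + eps).mean())

def total_objective(ssl, c_tcr, i_tcr, lambda_c=0.1, lambda_i=0.1):
    """Hypothetical weighted sum of the three training signals.

    lambda_c and lambda_i are illustrative weights, not values from the paper.
    """
    return ssl + lambda_c * c_tcr + lambda_i * i_tcr

# Toy usage on a 2x2 frame with 3 classes.
label = np.array([[0, 1], [2, 1]])
perfect = np.eye(3)[label].transpose(2, 0, 1)  # one-hot (C, H, W) prediction
uniform = np.full((3, 2, 2), 1.0 / 3)          # maximally uncertain prediction
print(source_ce(perfect, label))  # close to 0
print(source_ce(uniform, label))  # close to log(3)
print(total_objective(source_ce(uniform, label), c_tcr=0.2, i_tcr=0.05))
```

Keeping the TCR terms as separately weighted additions makes it easy to ablate each component by setting its weight to zero.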

This model can be applied to video analytics for autonomous driving, workplace security, and similar applications.

Dayan Guan, Jiaxing Huang, Aoran Xiao, Shijian Lu*. "Domain adaptive video segmentation via temporal consistency regularization." In Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021.
