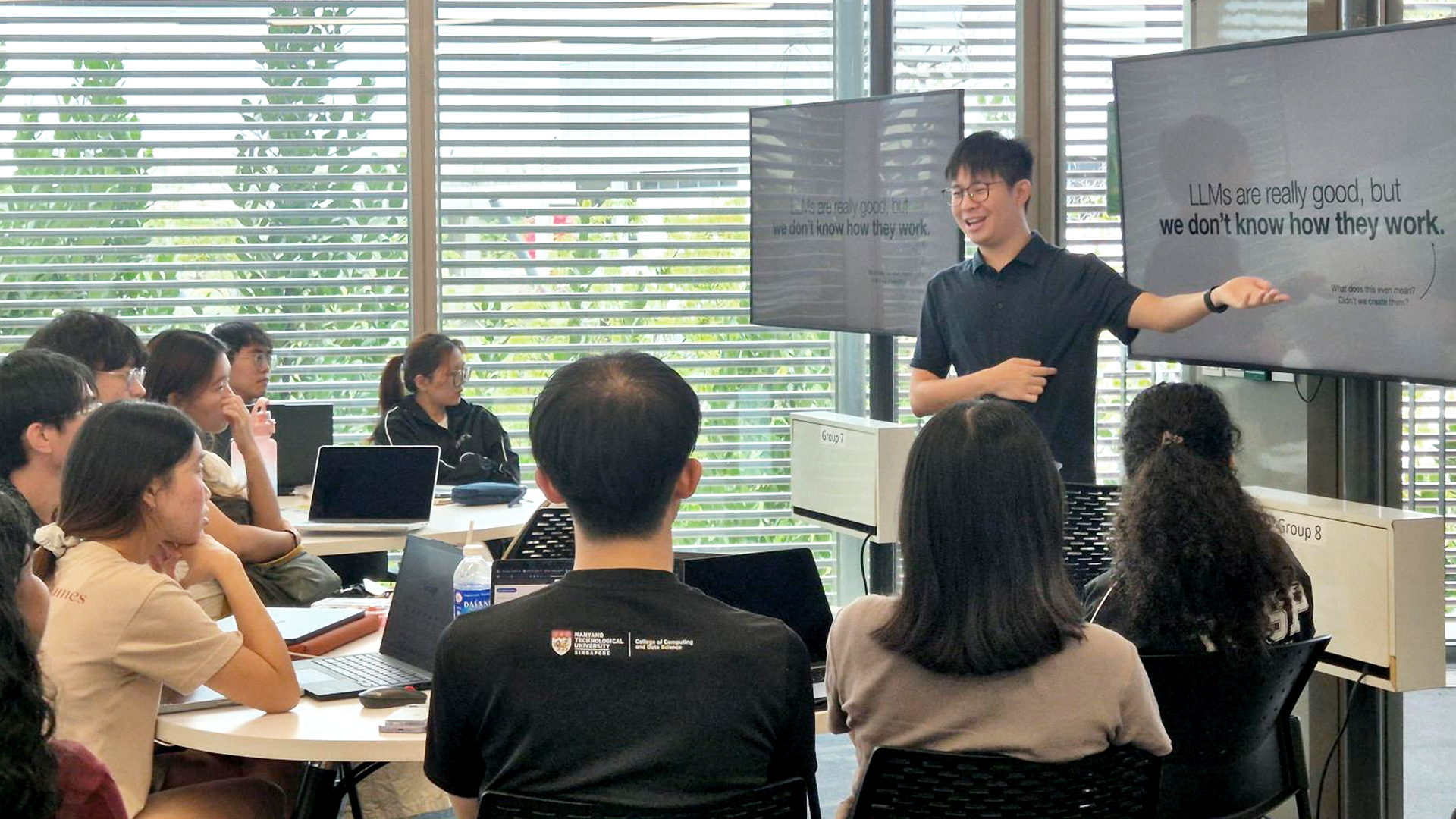

TAISP Talk on Mechanistic Interpretability with Clement Neo

Last week, the Turing AI Scholars Programme (TAISP) welcomed Clement Neo, a researcher at NTU’s Digital Trust Centre / Singapore AI Safety Institute, for an engaging talk on mechanistic interpretability: the science of understanding what really happens inside large language models (LLMs).

Understanding the Black Box

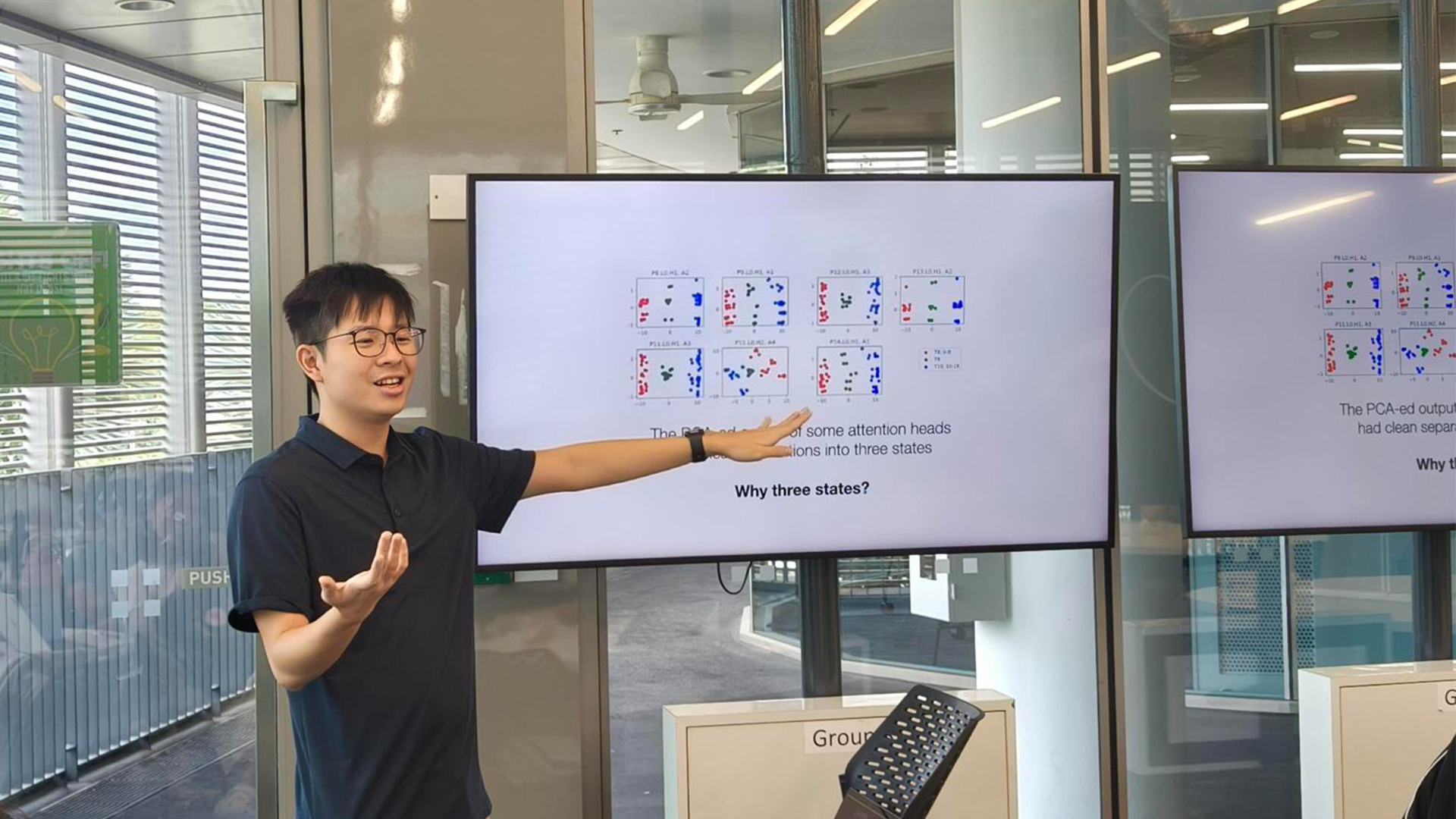

Clement began by highlighting the remarkable progress of modern LLMs. From reaching gold-medal standard at the International Mathematical Olympiad to explaining complex topics across disciplines, these models keep getting more capable. Yet they remain black boxes: their layers of attention and hidden states make it difficult to explain how they arrive at their outputs.

How Models Really Think

To make this point vivid, Clement shared examples of counterintuitive behaviors found in LLMs:

Mathematical Operations: While humans solve addition step by step, transformers must compute the answer in parallel across their layers, often even making predictions ahead of time. Because of this architecture, the strategies they learn can seem unnatural or illogical to us.

Refusal Mechanisms: The refusal behavior in today’s chat models, often trained through RLHF, turns out to rely on a single direction in the model’s internal activations. When researchers removed this direction, the model stopped refusing harmful prompts. This helps explain why jailbreaks can bypass safeguards so easily: the underlying refusal mechanism is a shallow, single vector direction that triggers stock replies such as “Sorry, I cannot…”.
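To make the idea of “removing a direction” concrete, here is a minimal Python sketch using NumPy. It assumes the refusal direction is estimated as the difference between mean activations on harmful and harmless prompts (one common approach, assumed here rather than taken from the talk), and then projects that direction out of each activation vector. The activations are synthetic random vectors with a planted direction, not data from a real model, and the function names are hypothetical.

```python
import numpy as np


def refusal_direction(harmful_acts: np.ndarray, harmless_acts: np.ndarray) -> np.ndarray:
    """Estimate a unit 'refusal direction' as a difference of mean activations (assumed method)."""
    diff = harmful_acts.mean(axis=0) - harmless_acts.mean(axis=0)
    return diff / np.linalg.norm(diff)


def ablate_direction(acts: np.ndarray, direction: np.ndarray) -> np.ndarray:
    """Remove each activation vector's component along a unit-length direction."""
    coeffs = acts @ direction                  # projection coefficient per row, shape (n,)
    return acts - np.outer(coeffs, direction)  # subtract the projected component


# Toy data: synthetic activations, NOT real model internals.
rng = np.random.default_rng(0)
d_model = 16
planted = np.zeros(d_model)
planted[3] = 1.0                               # hypothetical "refusal" axis we plant ourselves
harmless = rng.normal(size=(100, d_model))
harmful = rng.normal(size=(100, d_model)) + 5.0 * planted

r_hat = refusal_direction(harmful, harmless)
ablated = ablate_direction(harmful, r_hat)

# After ablation, no activation has any component left along r_hat.
print(np.abs(ablated @ r_hat).max())  # on the order of floating-point error
```

In an actual LLM, the same projection would be applied to the residual-stream activations at every layer and token position; this toy version only shows the underlying linear algebra.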

Explainer Models: Researchers use sparse autoencoders (SAEs) and sparse feature circuits to understand these unintuitive behaviors at scale. SAEs decompose a model’s internal activations into sparse, more interpretable features, while sparse feature circuits map how these features interact as information flows through the network. Together, these tools offer hope for explaining the counterintuitive strategies that LLMs employ across billions of parameters.
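The core of an SAE can be sketched in a few lines. The NumPy sketch below assumes the common formulation: a ReLU encoder maps an activation vector into a wider, overcomplete feature space, a linear decoder reconstructs the original vector, and the loss combines reconstruction error with an L1 penalty that pushes most features to zero. The class name, dimensions, and `l1_coeff` value are hypothetical, the weights are random and untrained, and sparse feature circuits are not shown.

```python
import numpy as np


class SparseAutoencoder:
    """Minimal, untrained SAE sketch: an overcomplete dictionary with a ReLU encoder."""

    def __init__(self, d_model: int, d_features: int, l1_coeff: float = 1e-3, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W_enc = rng.normal(scale=0.1, size=(d_model, d_features))
        self.b_enc = np.zeros(d_features)
        self.W_dec = rng.normal(scale=0.1, size=(d_features, d_model))
        # Normalize decoder rows so each feature direction has unit norm.
        self.W_dec /= np.linalg.norm(self.W_dec, axis=1, keepdims=True)
        self.b_dec = np.zeros(d_model)
        self.l1_coeff = l1_coeff

    def encode(self, x: np.ndarray) -> np.ndarray:
        # ReLU keeps feature activations non-negative.
        return np.maximum(0.0, (x - self.b_dec) @ self.W_enc + self.b_enc)

    def decode(self, f: np.ndarray) -> np.ndarray:
        # Reconstruct activations as a weighted sum of feature directions.
        return f @ self.W_dec + self.b_dec

    def loss(self, x: np.ndarray):
        f = self.encode(x)
        x_hat = self.decode(f)
        recon = np.mean(np.sum((x - x_hat) ** 2, axis=-1))                 # reconstruction error
        sparsity = self.l1_coeff * np.mean(np.sum(np.abs(f), axis=-1))     # L1 sparsity penalty
        return recon + sparsity, f


sae = SparseAutoencoder(d_model=16, d_features=64)
acts = np.random.default_rng(1).normal(size=(8, 16))  # stand-in for real model activations
total_loss, features = sae.loss(acts)
print(features.shape, total_loss)
```

Training would minimize this loss over many real activations, typically with an autodiff framework; afterwards, each row of `W_dec` is a candidate feature direction that researchers can try to interpret.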

Mechanistic interpretability lets researchers trace the computations inside these models, giving us insight into why a model produces a given output. That understanding is key to making AI systems safer.

Research as Curiosity and Play

Beyond the technical content, Clement’s message carried an inspiring note: research in AI safety, or research in general, need not be intimidating. Instead, it can be fun, exploratory, and curiosity-driven. His encouragement to the scholars was simple yet powerful: if you have a question about how LLMs work, build something, run experiments, and learn by doing.

Looking Ahead

The session left TAISP scholars with both concrete insights into the strange inner workings of LLMs and a broader vision of research as an open invitation. Talks like these not only demystify advanced AI concepts but also nurture the next generation of researchers, engineers, and thinkers who will shape a safe and responsible future for AI.