Low-Resolution Information Matters: Learning Multi-Resolution Representations for Person Re-Identification

Synopsis

This technology, which leverages augmented intelligence, examines how image resolution influences feature extraction and introduces a novel method for cross-resolution person re-identification (re-ID), i.e., matching images of the same person captured at different resolutions. In crowded spaces such as airports, stadiums, or shopping malls, person re-ID can assist with crowd management and public safety.

Opportunity

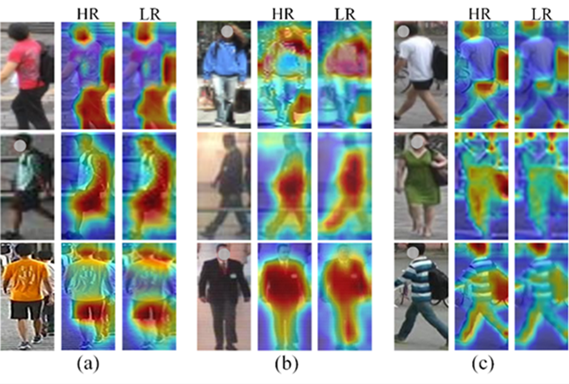

- Existing methods focus only on recovering higher-resolution images and extracting high-resolution (HR) feature representations from them. Although the complementary details generated by super-resolution (SR) enhance the visual quality of person images, these details may not reflect actual human characteristics. As a result, features extracted solely from the generated HR images are sometimes not discriminative enough to match the correct individual.

- Although local details are lost in low-resolution (LR) images, LR images still contain valuable global information, such as body shape and colour, which can complement HR features corrupted by false details. Existing methods neglect this information.

- Most existing methods process gallery and query images with different strategies because they tacitly assume that all gallery images are HR and all query images are LR. In practice, however, the distinction between gallery and query sets is often blurred, and images cannot always be definitively classified as HR or LR.

The proposed method addresses these challenges by handling images captured at diverse resolutions in practical settings.

Technology

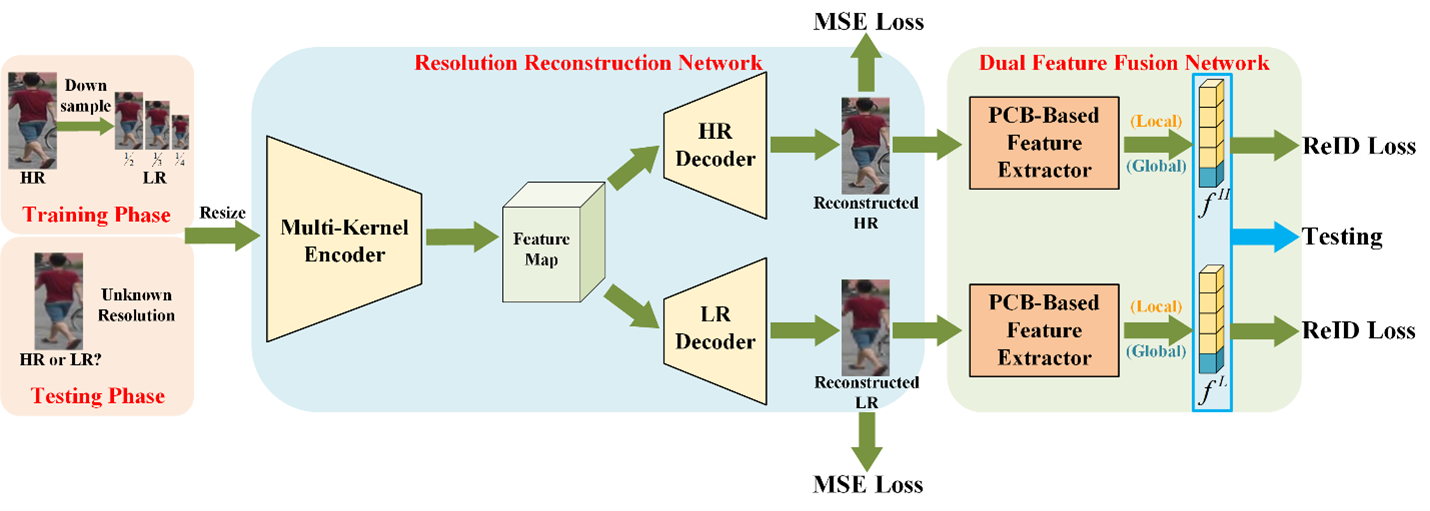

We propose a novel multi-resolution representations joint learning (MRJL) technology for cross-resolution person re-ID. It consists of two sub-networks: a resolution reconstruction network (RRN) and a dual feature fusion network (DFFN).

To generate both HR and LR images from an input image of unknown resolution, we design the RRN module, which consists of an encoder and two independent decoders. The encoder extracts a feature map from the input image, and the two decoders reconstruct this feature map into an HR version and an LR version, respectively.
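The encoder/two-decoder data flow can be sketched as follows. This is a minimal, non-authoritative illustration, assuming the learned encoder and decoders are replaced by fixed resampling stand-ins (nearest-neighbour resizing and 2x2 average pooling) so that it runs with the Python standard library alone. The names `rrn`, `encoder`, `hr_decoder`, `lr_decoder` and the sizes `NET_INPUT_SIZE` and `HR_SIZE` are hypothetical; only the interface (one input of arbitrary size in, an HR and an LR version out) mirrors the description above.

```python
"""Data-flow sketch of an RRN-style module: one shared encoder, two decoders.

Assumption: the learned encoder and decoders are replaced by fixed,
hypothetical resampling stand-ins. Only the interface is illustrated,
not the trained model.
"""
from typing import List, Tuple

Image = List[List[float]]  # grayscale image stored as rows of intensities

NET_INPUT_SIZE = (16, 8)  # hypothetical fixed network input (height, width)
HR_SIZE = (32, 16)        # hypothetical HR output size (height, width)


def resize_nearest(img: Image, height: int, width: int) -> Image:
    """Nearest-neighbour resampling to an arbitrary target size."""
    src_h, src_w = len(img), len(img[0])
    return [
        [img[r * src_h // height][c * src_w // width] for c in range(width)]
        for r in range(height)
    ]


def avg_pool2(img: Image) -> Image:
    """2x2 average pooling with stride 2 (halves height and width)."""
    return [
        [
            (img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4.0
            for c in range(0, len(img[0]) - 1, 2)
        ]
        for r in range(0, len(img) - 1, 2)
    ]


def encoder(img: Image) -> Image:
    """Stand-in encoder: resize to the network input, then downsample."""
    return avg_pool2(resize_nearest(img, *NET_INPUT_SIZE))


def hr_decoder(feature_map: Image) -> Image:
    """Stand-in HR decoder (learned upsampling in a real RRN)."""
    return resize_nearest(feature_map, *HR_SIZE)


def lr_decoder(feature_map: Image) -> Image:
    """Stand-in LR decoder: one more 2x downsampling step (8x4 -> 4x2)."""
    return avg_pool2(feature_map)


def rrn(img: Image) -> Tuple[Image, Image]:
    """Shared encoder followed by two independent decoders."""
    feature_map = encoder(img)
    return hr_decoder(feature_map), lr_decoder(feature_map)


# Usage: inputs of different, unknown resolutions yield fixed-size outputs.
small = [[0.0], [1.0]]                                          # 2x1 input
large = [[float(r + c) for c in range(16)] for r in range(32)]  # 32x16 input
for image in (small, large):
    hr, lr = rrn(image)
    print(len(hr), len(hr[0]), len(lr), len(lr[0]))  # prints: 32 16 4 2
```

Because both outputs are derived from one shared feature map, the caller never needs to know the input resolution in advance; in the actual RRN the decoders are trained networks rather than fixed resampling operators.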

The DFFN module adopts a dual-branch structure to extract HR and LR feature representations from the corresponding generated images. Notably, the two branches do not share parameter weights. At test time, the HR and LR feature representations are computed jointly, concatenated, and used in the distance measure. The overall procedure is shown in Figure 1.
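The test-time fusion step described above (separate branches, concatenation, distance-based matching) can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' implementation: each branch is a hypothetical linear projection of a tiny flattened image (`HR_BRANCH_W`, `LR_BRANCH_W`), and the distance measure is assumed to be Euclidean, since the description does not specify one. The helper names `dffn_embed` and `rank_gallery` are likewise hypothetical.

```python
"""Test-time sketch of DFFN-style dual-branch feature fusion and ranking.

Assumptions (not from the source): each branch is a hypothetical linear
projection of a flattened image, and the distance measure is Euclidean.
"""
import math
from typing import Dict, List, Sequence

Vector = List[float]

# Two separately parameterised branches: no weights are shared between them.
HR_BRANCH_W = [[1.0, 0.0, 0.0, 0.0],
               [0.0, 1.0, 0.0, 0.0]]  # 4-pixel HR input -> 2-d HR feature
LR_BRANCH_W = [[0.5, 0.5]]            # 2-pixel LR input -> 1-d LR feature


def linear(weights: Sequence[Sequence[float]], x: Sequence[float]) -> Vector:
    """Matrix-vector product: one output value per weight row."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def dffn_embed(hr_image: Sequence[float], lr_image: Sequence[float]) -> Vector:
    """Extract HR and LR features with separate branches, then concatenate."""
    hr_feature = linear(HR_BRANCH_W, hr_image)
    lr_feature = linear(LR_BRANCH_W, lr_image)
    return hr_feature + lr_feature  # list concatenation: [HR | LR]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_gallery(query: Vector, gallery: Dict[str, Vector]) -> List[str]:
    """Return gallery identities sorted from nearest to farthest."""
    return sorted(gallery, key=lambda pid: euclidean(query, gallery[pid]))


# Usage: both candidates have identical HR features, so only the LR part
# (standing in for a hypothetical global cue such as colour) separates them.
query = dffn_embed([1.0, 0.0, 0.0, 0.0], [0.2, 0.4])
gallery = {
    "id_B": dffn_embed([1.0, 0.0, 0.0, 0.0], [0.8, 0.8]),
    "id_A": dffn_embed([1.0, 0.0, 0.0, 0.0], [0.2, 0.4]),
}
print(rank_gallery(query, gallery))  # prints: ['id_A', 'id_B']
```

The toy gallery is constructed so that HR features alone cannot tell the candidates apart, which mirrors the motivation that LR global information can complement HR features.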

Figure 1: The architecture of the proposed multi-resolution representations joint learning (MRJL). This framework consists of two jointly trained sub-networks, resolution reconstruction network (RRN) and dual feature fusion network (DFFN). The former is used to reconstruct input images into two versions with different resolutions, and the latter is used to extract feature representations from the generated high-resolution (HR) and low-resolution (LR) images.

Figure 2: Examples of feature response maps extracted from samples of different resolutions. All the cases are classified into three groups.

Applications & Advantages

- Versatile application domains: Crime prevention and public management (e.g., finding missing persons), including in low-resolution scenarios.

- Multi-resolution learning: Efficiently leverages low-resolution (LR) features to augment high-resolution (HR) features.

- Resolution-agnostic structure: Input image resolution need not be known, so diverse scenarios are accommodated seamlessly.

- Unified feature extraction: RRN and DFFN process images of varying resolutions uniformly, ensuring consistent feature representations.
