The AI Pivot: Redefining Product Strategy for Fintech & UX

(From left) Hanson Yap, Programme Director, NTU PACE; Alvin Eng, Head of Enterprise AI, Innovation Group, UOB; Dr Ernie Teo, Senior Lecturer, NTU; Professor Boh Wai Fong, Vice President (Lifelong Learning & Alumni Engagement); Dr Chloe Gu, Senior Lecturer, NTU; Chooake Wongwattanasilpa, Chief Experience Officer, Bank of Singapore.

The second instalment of the PACE Forward Series featured an engaging panel discussion and a keynote presentation exploring how artificial intelligence is redefining product management in fintech and user experience (UX), highlighting the importance of transparency, usability and cogency. Co-hosted by NTU Academy for Professional and Continuing Education (NTU PACE) and NTU Office of Alumni Engagement, the event brought together current and aspiring product managers at the Lifelong Learning Institute.

From blockchain to generative AI, innovation has shifted from being a differentiator to becoming a core driver of business strategy. Yet one truth remains constant: even the smartest tech falls short without clear vision and execution.

The true measure of impact lies in strategic product management, which means understanding user pain points, aligning technology with real-world needs, and ensuring that innovation delivers value without compromising experience or ethics.

What’s Top of Mind for Product Teams Today

In an era where AI promises limitless potential, the temptation to hop on the AI bandwagon is strong. Meaningful adoption begins by addressing the user problem, not by focusing on the technology. Product managers must ask: What friction am I solving? Where do users encounter challenges, or where do decision-making processes slow down?

Product managers today wear many hats. While they may not be specialists in data science or design, they are expected to navigate conversations around metrics, feasibility, and ethics with confidence. Feasibility demands a practical understanding of what can be built within technical, financial, and operational constraints, while ethics calls for awareness of how products impact users, data privacy, and society at large.

Bridging disciplines, product managers need to translate complexity into clarity, ensuring every AI-driven solution is both explainable and impactful. For instance, in a banking app, they might work with data teams to explain AI-driven spending alerts in simple language, helping customers make informed decisions with confidence.

Across industries, product teams are grappling with what AI means for their work. At an individual level, team members are experimenting with new AI-powered tools integrated into their daily workflows. Product managers are using analytics assistants that generate insights and recommendations automatically. Designers are turning to platforms like Figma to generate mock-ups from text prompts. Developers are adopting AI coding companions such as GitHub Copilot, which have been shown to increase productivity. From auto-completing functions to explaining unfamiliar code or generating test cases, these tools free up time for creativity and higher-level problem-solving.

At a team level, new AI-enabled development platforms are also changing the pace and economics of product creation. Low-code tools that generate apps from simple inputs are reducing the cost of experimentation and lowering barriers to prototyping. This shift is reshaping how teams approach development speed and the skills required to deliver effectively.

Most product teams face a steep learning curve with AI. Many initiatives fail not for lack of capability, but because AI is applied to the wrong problems: improvements that users do not actually care about. AI excels at augmentation, automation, and personalisation, yet struggles where trust, transparency, and explainability are critical. In regulated sectors like finance, opaque or inconsistent outputs can quickly undermine confidence and create risk.

Product leaders must therefore be deliberate about where and how to integrate AI. AI is not a one-size-fits-all solution; its impact varies by feature. The real work lies in identifying problems where AI adds measurable, incremental value. One example is the use of AI-driven credit scoring models in banking. While these models can enhance efficiency and accuracy, many rely on complex neural networks that offer limited insight into their decision-making process. When customers are denied credit without clear explanations, both trust and regulatory compliance suffer. Banks have since shifted toward hybrid or interpretable models that balance predictive power with transparency.
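To make the idea of an interpretable model concrete, the sketch below shows a simple additive scorecard that returns plain-language reasons alongside its decision. It is an illustration only, not a method described at the forum: the feature names, weights, reference profile, and approval threshold are all hypothetical.

```python
# Minimal sketch of an interpretable credit scorecard with reason codes.
# All weights, thresholds, and feature names are hypothetical assumptions.

WEIGHTS = {
    "on_time_payment_rate": 40.0,  # share of bills paid on time (0 to 1)
    "credit_utilisation": -30.0,   # share of credit limit in use (0 to 1)
    "years_of_history": 2.0,       # length of credit history in years
}
BASE_SCORE = 50.0
APPROVAL_THRESHOLD = 70.0

# Hypothetical "ideal" profile that each applicant is compared against.
REFERENCE = {
    "on_time_payment_rate": 1.0,
    "credit_utilisation": 0.0,
    "years_of_history": 10.0,
}


def score(applicant):
    """Additive score: every feature's contribution is directly visible."""
    return BASE_SCORE + sum(WEIGHTS[k] * applicant[k] for k in WEIGHTS)


def reasons(applicant, top_n=2):
    """Return the features that cost the most points versus the reference."""
    points_lost = {
        k: WEIGHTS[k] * (REFERENCE[k] - applicant[k]) for k in WEIGHTS
    }
    ranked = sorted(points_lost.items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, lost in ranked[:top_n] if lost > 0]


def decide(applicant):
    """Return the decision plus reasons that can be shown to the customer."""
    if score(applicant) >= APPROVAL_THRESHOLD:
        return "approved", []
    return "declined", reasons(applicant)


if __name__ == "__main__":
    applicant = {
        "on_time_payment_rate": 0.6,
        "credit_utilisation": 0.9,
        "years_of_history": 3,
    }
    print(decide(applicant))
```

Because the score is a plain weighted sum, a declined customer can be told which factors weighed most heavily, which is the kind of explanation an opaque neural network does not readily provide.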

As organisations embrace AI, questions about structure and capability are emerging. Should companies establish dedicated AI product teams, or should existing teams integrate AI features into their workflows? This debate mirrors the early days of mobile product design, when teams had to decide whether to build specialised mobile-focused teams or embed mobile-first thinking across all teams. Over time, mobile integration became universal. A similar trajectory may unfold with AI. As tools become more accessible, AI will increasingly be embedded within every team’s work rather than isolated to a single function.

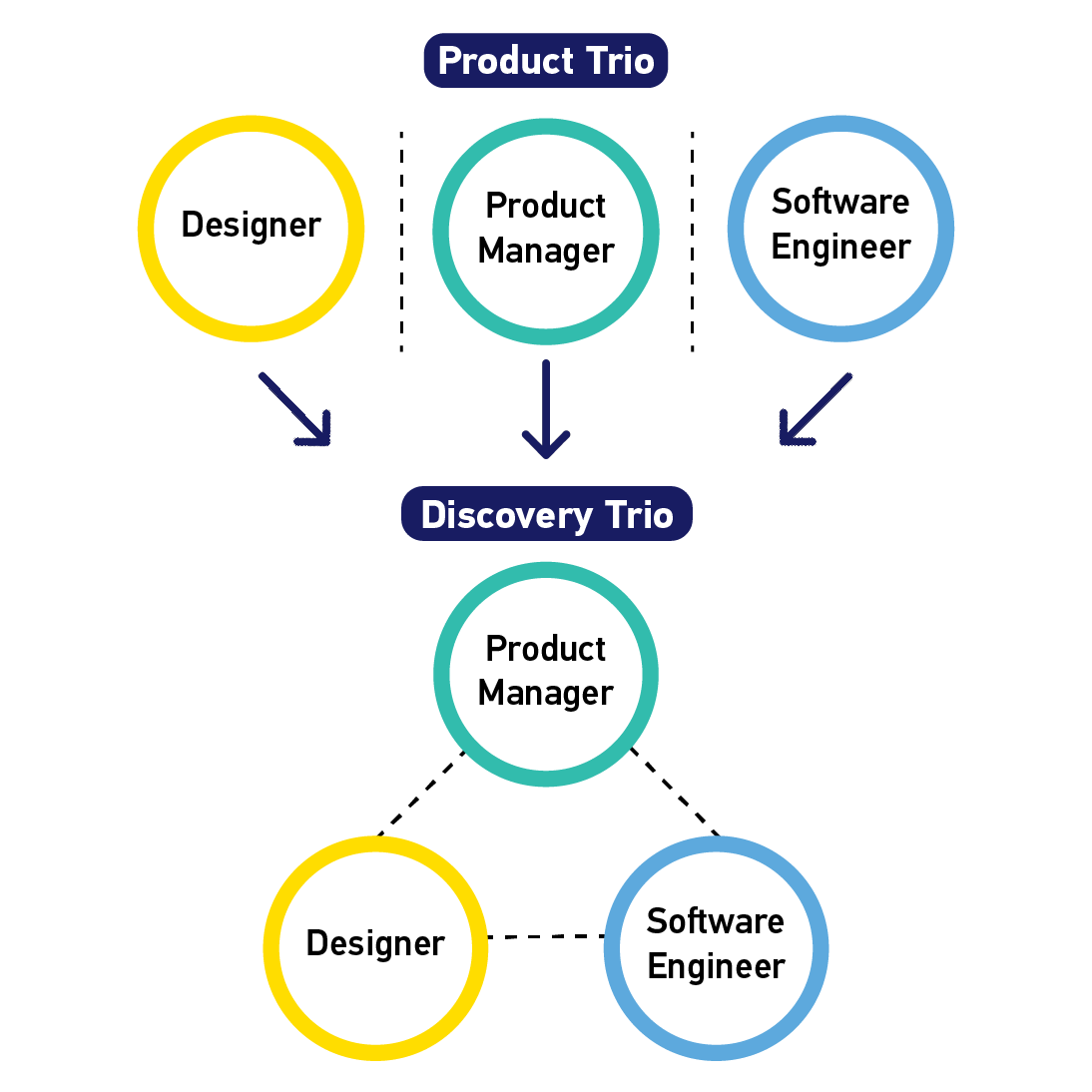

This transformation is also influencing team size and roles. With automation handling more of the repetitive work, smaller, cross-functional groups are becoming the norm. The classic “Product Trio” of a product manager, designer, and software engineer may evolve into what some call a “Discovery Trio” — a much more adaptive group focused on understanding user problems rather than simply building solutions.

Smaller teams do not mean fewer opportunities. Lower development costs and easier access to AI tools will likely trigger an explosion of new products and startups. What will matter most is judgment: knowing what to build and why it matters.

Building Trust Through Design and Data

Responsible AI use requires robust guardrails. Organisations benefit from clear guiding principles that keep innovation purposeful, accountable, and explainable.

AI can amplify both value and risk. Over-reliance on outputs without human oversight can erode critical thinking or perpetuate systemic bias. Hence, “human in the loop” frameworks remain essential, allowing judgment, empathy, and context to complement automation. It is also crucial to embed operational responsibility by delivering measurable ROI while ensuring that every deployment enhances rather than endangers user trust.

Digital trust is centred around good user experience. In the financial industry, UX is not just about elegant interfaces; it is about emotional assurance and functional clarity. Users must feel in control and confident that systems work in their best interest. For example, when product teams used real-time behavioural data to discover that most users logged in only briefly to check their account balance, they introduced a “peek” feature that displayed the balance at a glance. It was a small but powerful change that met users exactly where they were, delivering a convenience they genuinely valued.

Conversely, AI tools that lack transparency risk alienating users. In mobile banking applications that integrate robo-advisory services, a well-designed interface cannot compensate for an AI-based model that operates as a “black box” in the eyes of the consumer. Experience has shown that when users cannot understand the rationale behind investment recommendations, their trust and engagement fall, even if the tool’s performance is objectively strong. A lack of transparency about how portfolio recommendations are derived can impede adoption and lead users to revert to human advisors or even abandon the app altogether. In regulated sectors where accountability and explainability are critical, trust, not novelty, remains the decisive factor in sustained adoption.

Leadership in the age of AI demands clarity of focus and courage of conviction. It requires aligning across functions, navigating regulatory change, and maintaining operational discipline while still keeping pace. Foresight is not about predicting the future, but about recognising emerging patterns, anticipating ripple effects, and acting with purpose to shape what comes next.

The best leaders prioritise progress over perfection — experimenting thoughtfully, learning quickly, and scaling only what works. The goal is not to automate everything but to build systems that augment human judgment, creativity, and trust. In a landscape where innovation can sometimes feel performative, lasting impact lies in pursuing progress that is both meaningful and sustainable.