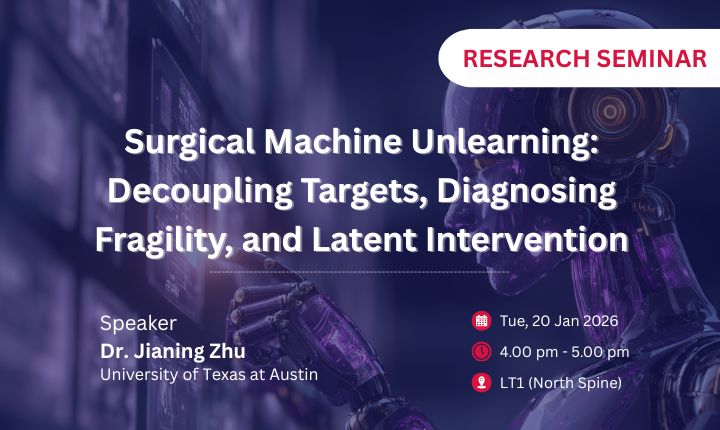

Surgical Machine Unlearning: Decoupling Targets, Diagnosing Fragility, and Latent Intervention by Dr. Jianing Zhu

Abstract:

Machine unlearning seeks to remove specific data or behaviors from trained models to meet privacy, safety, and regulatory demands. However, existing methods often treat unlearning as a blunt data-removal problem, overlooking how knowledge is structured inside modern models. In this talk, I will present a representation-aware view of unlearning, showing that what a model forgets, and what it unintentionally loses, depends on target definitions, evaluation protocols, and internal model structure. By analyzing unlearning in both classical deep learning tasks and large language models, I will illustrate why forgetting is inherently non-uniform and how lightweight, targeted interventions can enable more precise and reliable unlearning.

Speaker Bio:

Jianing Zhu (https://zfancy.github.io/) is a Postdoctoral Fellow at the University of Texas at Austin, advised by Professor Atlas Wang. His research interests lie in building human-aligned machine intelligence by advancing robustness, reliability, and transparency in modern machine learning systems, with a recent focus on machine unlearning. He has published papers in top-tier machine learning venues, including NeurIPS, ICML, and ICLR, and his research was recognized with the TrustAI Rising Star Award in 2025.