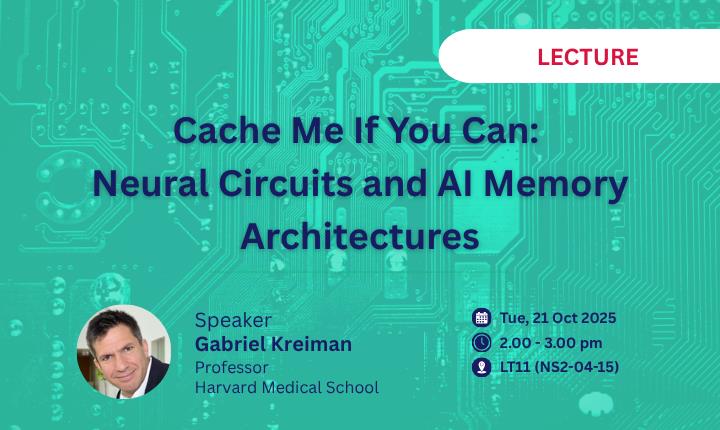

Cache Me If You Can: Neural Circuits and AI Memory Architectures by Prof Gabriel Kreiman

Abstract

AI has made major strides in processing sensory information, as in computer vision. The last few years have also seen remarkable progress in the ability of machines to use language. One of the next verticals that we need to conquer in AI is memory. Our memories are the basic fabric of who we are. Memories constitute a highly compressed, abstract, and dynamic representation of our lived experiences spanning decades. I will give a brief overview of what is known about how humans store memories at the behavioral level, followed by research efforts to elucidate the neural mechanisms by which the brain encodes and retrieves memories. I will discuss current efforts to develop memory systems in AI and contrast them with the properties of human-like memory consolidation and retrieval.

Speaker Bio

Gabriel Kreiman is a Professor at Harvard Medical School and the Center for Brains, Minds and Machines. He received his M.Sc. and Ph.D. from Caltech and was a postdoctoral fellow at MIT. He received the NSF CAREER Award and the NIH New Innovator Award. His research focuses on computational neuroscience and AI approaches to vision, learning, and memory. He is also the founder and CEO of Memorious.