A Universal Control Framework for Human-Robot Interaction with Guaranteed Human Safety and Hierarchical Task Consistency

Synopsis

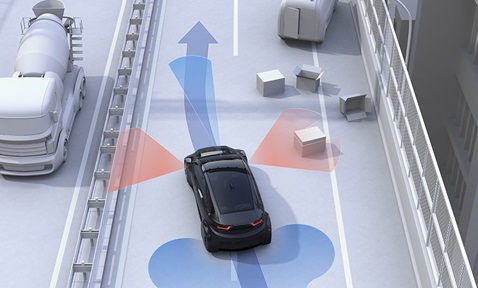

This Unified Control Framework for Human-Robot Interaction provides a flexible control method for robots that collaborate with humans. It derives a stable controller from a potential energy function, so a single controller can be adapted to a range of tasks and interaction scenarios. Customisable task parameters and interactive weights define the robot's behaviour. The technology can enhance human-robot collaboration in manufacturing, education, healthcare, and entertainment, improving productivity and user experience while reducing the need for user intervention and sensor calibration.

Opportunity

Human-robot interaction (HRI) is a growing field that explores how humans and robots can cooperate in domains such as manufacturing, education, healthcare, and entertainment. Designing effective, safe robot control systems that adapt to different tasks and environments poses significant challenges. A unified control framework for HRI aims to provide a general, flexible control method that can handle different robot and interaction tasks with minimal user intervention.

The commercial potential of this technology is significant, as it enables more efficient and reliable human-robot collaboration across various domains. The framework can enhance manufacturing processes by allowing robots to switch smoothly between tasks and collaboration modes with humans. It also enhances the user experience with social robots, enabling natural and intuitive communication and interaction. Additionally, the framework facilitates robot deployment and integration in different scenarios, reducing the need for user intervention and sensor calibration.

Technology

The unified control framework for human-robot interaction proposes a general and flexible control method for robots working with humans in various scenarios. Using a potential energy function, it derives a stable controller capable of handling different robot and human-robot interaction tasks. Customisable task parameters and interactive weights specify robot interaction behaviours according to different applications.
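The idea of deriving a stable controller from a potential energy function, with weights that shape the interaction, can be illustrated with a minimal sketch. It assumes a simple kinematic robot in velocity-control mode and a hypothetical weighted quadratic potential P(x) = sum_i w_i/2 * ||x - g_i||^2, where each g_i is a goal (for example a task target or a human's hand position) and w_i stands in for an interactive weight. The framework's actual potential function, task parameters and weights are not described in this listing; the function names and numbers below are illustrative only. Because the command u = -k * dP/dx gives dP/dt = -k * ||dP/dx||^2 <= 0, the potential acts as a Lyapunov function, which is the kind of stability argument a potential-based design relies on.

```python
from typing import List, Sequence

Vector = List[float]


def potential(x: Sequence[float], goals: Sequence[Sequence[float]],
              weights: Sequence[float]) -> float:
    """Hypothetical weighted quadratic potential P(x) = sum_i w_i/2 * ||x - g_i||^2."""
    return sum(
        0.5 * w * sum((xj - gj) ** 2 for xj, gj in zip(x, g))
        for g, w in zip(goals, weights)
    )


def gradient(x: Sequence[float], goals: Sequence[Sequence[float]],
             weights: Sequence[float]) -> Vector:
    """Gradient dP/dx = sum_i w_i * (x - g_i)."""
    grad = [0.0] * len(x)
    for g, w in zip(goals, weights):
        for j in range(len(x)):
            grad[j] += w * (x[j] - g[j])
    return grad


def velocity_command(x, goals, weights, gain: float = 1.0) -> Vector:
    """Velocity-mode law u = -gain * dP/dx, so dP/dt = -gain * ||dP/dx||^2 <= 0."""
    return [-gain * gj for gj in gradient(x, goals, weights)]


def simulate(x0, goals, weights, gain: float = 1.0,
             dt: float = 0.01, steps: int = 2000) -> Vector:
    """Integrate x_dot = u with explicit Euler (stable while 0 < dt*gain*sum(w) < 2)."""
    x = list(x0)
    for _ in range(steps):
        u = velocity_command(x, goals, weights, gain)
        x = [xj + dt * uj for xj, uj in zip(x, u)]
    return x


# Usage: goal 0 is a task target, goal 1 a human's hand (illustrative values).
goals = [[1.0, 0.0], [0.0, 1.0]]
weights = [3.0, 1.0]  # (task weight, human weight)
x_final = simulate([0.0, 0.0], goals, weights)
print([round(v, 3) for v in x_final])  # [0.75, 0.25]
```

With this quadratic form the robot settles at the weighted average of the goals, so raising the human weight relative to the task weight pulls the equilibrium toward the human. This is one simple way weights could shift a robot between autonomous and collaborative behaviour; it is not a claim about how the framework itself blends tasks.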

The framework includes a learning algorithm that estimates the unknown kinematics of external sensors, such as vision systems, which improves the robot's perception and responsiveness. It can be applied to existing robot control systems that use different control modes, such as velocity control or torque control.
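The listing does not say which learning algorithm estimates the external sensor's kinematics. As a stand-in, the sketch below uses Broyden's rank-one update, a standard technique in uncalibrated visual servoing, to refine an estimate of the Jacobian that maps small robot motions dq to observed sensor feature changes dy. Everything here is an assumption for illustration: the function names, the probing motion, and `J_true`, which only simulates what a camera would measure.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(J: Matrix, v: Vector) -> Vector:
    """Matrix-vector product J @ v for small dense lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in J]


def broyden_update(J: Matrix, dq: Vector, dy: Vector, alpha: float = 1.0) -> Matrix:
    """One Broyden rank-one update: J_new = J + alpha * (dy - J dq) dq^T / (dq^T dq)."""
    denom = sum(d * d for d in dq)
    if denom < 1e-12:
        # The robot barely moved, so the measurement carries no information.
        return [row[:] for row in J]
    residual = [y - p for y, p in zip(dy, matvec(J, dq))]
    return [
        [J[i][j] + alpha * residual[i] * dq[j] / denom for j in range(len(dq))]
        for i in range(len(J))
    ]


def estimate_jacobian(J_true: Matrix, J0: Matrix, steps: int = 100,
                      step: float = 0.01) -> Matrix:
    """Refine J0 from small exploratory motions.

    J_true (hypothetical) only simulates the sensor; on a real robot dy
    would come from the vision system instead.
    """
    J = [row[:] for row in J0]
    for k in range(steps):
        theta = 0.7 * k  # rotate the probing direction so every joint is excited
        dq = [step * math.cos(theta), step * math.sin(theta)]
        dy = matvec(J_true, dq)  # simulated feature change
        J = broyden_update(J, dq, dy)
    return J


# Usage: start from an identity guess and recover the (hypothetical) true map.
J_true = [[2.0, 0.5], [-0.3, 1.5]]
J_hat = estimate_jacobian(J_true, [[1.0, 0.0], [0.0, 1.0]])
print([[round(v, 3) for v in row] for row in J_hat])  # [[2.0, 0.5], [-0.3, 1.5]]
```

With `alpha = 1` each update makes the estimate agree exactly with the latest measurement while changing it as little as possible, so the estimation error shrinks whenever successive motions point in different directions. A real system would also need to handle sensor noise, for example with a smaller `alpha`.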

The technology enables efficient and reliable human-robot collaboration across various domains. It can improve manufacturing quality and productivity by allowing robots to switch tasks and collaboration modes smoothly. It enhances user experience and satisfaction with social robots by enabling natural and intuitive communication. Additionally, the framework facilitates robot deployment and integration in different scenarios, reducing the need for user intervention and sensor calibration.

Applications & Advantages

- Handles different robot and interaction tasks with minimal user intervention.

- Improves manufacturing quality and productivity by allowing robots to switch tasks and collaboration modes smoothly.

- Enhances user experience and satisfaction with social robots by enabling natural and intuitive communication.

- Facilitates robot deployment and integration in different scenarios, reducing the need for user intervention and sensor calibration.
