Grand Challenge

The Grand Challenge Results are out.

CRPO is set to initiate the CRPO Grant Call – Grand Challenge to back the advancement of cyber technologies and innovation addressing tangible issues with transparency and accountability. The goal is to generate national benefits for Singapore.

The CRPO Grant Call is a competitive funding initiative aimed at fostering research projects that push the boundaries of cyber technologies within Singapore-based Institutes of Higher Learning (IHLs)1, Research Institutes (RIs)2, and Industry Partners3. We enthusiastically invite proposals from diverse and collaborative teams, including academics, researchers, scientists, engineers, domain experts, and other professionals, to contribute to the advancement of cutting-edge cyber technology science.

Grand Challenges Grants: This pillar aims to foster the creation of innovative approaches and groundbreaking research ideas that address challenges with substantial industrial and societal impact. These projects align with the strategic direction set by the government and hold national strategic importance in both the short and long term. Ideally, these projects correspond to current (if short-term) or future (if long-term) governmental challenges or initiatives. For instance, cybersecurity technologies may target and develop solutions for challenges in healthcare, focusing on awareness and education for children and the elderly. These initiatives supplement and leverage government programs such as the Smart Nation initiative.

1.1. Aligned with the aforementioned goals and directions, this research grant call will concentrate on cybersecurity research.

1.2. The proposed opportunities and focus areas shall include:

1.2.1. AI Security

The proliferation of foundation models, such as OpenAI's GPT-4 [1] and Google's PaLM [2], has significantly transformed the landscape of Natural Language Processing and Artificial Intelligence. By learning patterns from vast amounts of data, these models have demonstrated remarkable success in understanding and generating human-like text across diverse vertical industrial scenarios such as financial services, healthcare, electronics, and smart city management.

However, the widespread deployment of foundation models has engendered a series of novel cyber-attack challenges that could have profound consequences for society, security, and privacy. Addressing these challenges is crucial to ensure the responsible and safe use of foundation models in an era characterized by digital ubiquity.

1.2.1.1. Challenges:

1.2.1.1.1. Adversarial Manipulation: Adversarial attacks exploit vulnerabilities in foundation models by introducing carefully crafted input perturbations, leading to incorrect or misleading outputs [3]. These attacks pose a significant threat, potentially enabling malicious actors to generate deceptive content, evade detection, or manipulate automated systems.

1.2.1.1.2. Data Poisoning: Data poisoning attacks represent another significant challenge. Malicious actors may attempt to manipulate the training data used to build foundation models, embedding biased or misleading information. As a result, the model's outputs may inadvertently perpetuate stereotypes, misinformation, or discriminatory content. Data poisoning attacks not only undermine the reliability of foundation models but also erode societal trust and exacerbate existing biases.

1.2.1.1.3. Privacy Breaches: Foundation models, often trained on large and diverse datasets, might inadvertently expose private or sensitive information contained within their learned representations [4]. Ensuring robust privacy-preserving mechanisms during training and deployment is essential to prevent unintended data leakage.

1.2.1.1.4. Transfer Learning Vulnerabilities: Pre-trained foundation models are commonly fine-tuned for specific tasks, and such transfer learning can introduce vulnerabilities. Cyber-attacks targeting transfer learning processes can potentially compromise model performance, introduce bias, or lead to unintended behaviors [5].
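To make the adversarial manipulation challenge (1.2.1.1.1) concrete, the following minimal sketch shows how a small punctuation perturbation, in the spirit of [3], can evade a naive detector. The keyword filter, its blocklist, and all function names are hypothetical stand-ins chosen for illustration; real foundation models and real attacks are far more complex.

```python
import string

# Hypothetical blocklist for a toy detector (not taken from the grant call).
BLOCKLIST = {"malware", "phishing"}


def naive_detector(text: str) -> bool:
    """Flag text if any whitespace-separated token matches the blocklist."""
    tokens = [t.strip(string.punctuation).lower() for t in text.split()]
    return any(t in BLOCKLIST for t in tokens)


def punctuation_perturb(text: str, mark: str = ".") -> str:
    """Insert a punctuation mark in the middle of every blocklisted word."""
    out = []
    for word in text.split():
        core = word.strip(string.punctuation).lower()
        if core in BLOCKLIST and len(word) > 2:
            mid = len(word) // 2
            word = word[:mid] + mark + word[mid:]
        out.append(word)
    return " ".join(out)


def normalizing_detector(text: str) -> bool:
    """Hardened variant: strip all punctuation before matching."""
    cleaned = text.translate(str.maketrans("", "", string.punctuation))
    return naive_detector(cleaned)


original = "Download this malware now"
perturbed = punctuation_perturb(original)  # "Download this mal.ware now"

print(naive_detector(original))         # True: the keyword is caught
print(naive_detector(perturbed))        # False: the perturbation evades it
print(normalizing_detector(perturbed))  # True: normalization restores detection
```

The hardened variant only closes this one gap. It illustrates why defenses need to anticipate whole families of perturbations rather than individual tricks.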

1.2.1.2. Goals: To overcome the challenges above, the primary goal of the Grand Challenge on this subject is to investigate innovative and efficient solutions that ensure the responsible and secure use of foundation models in significant applications. The proposed solution should encompass the following dimensions.

1.2.1.2.1. Adaptive Adversarial Training: Incorporating adversarial training techniques [6] can enhance foundation model robustness against adversarial attacks, making the model more resistant to input perturbations.

1.2.1.2.2. Robust Data Validation: Implementing rigorous data validation and verification mechanisms can help detect and mitigate data poisoning attempts during the training phase.

1.2.1.2.3. Privacy-Preserving Architectures: Exploring privacy-preserving techniques, such as differential privacy or federated learning [7], can safeguard sensitive information while maintaining foundation model utility.

1.2.1.2.4. Fine-Tuning Security: Developing secure and auditable fine-tuning procedures can reduce the risk of vulnerabilities introduced during the transfer learning process [8].
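As one illustration of the privacy-preserving techniques named in 1.2.1.2.3, the sketch below applies the Laplace mechanism, a standard building block of differential privacy, to a simple counting query. It is a minimal example under stated assumptions: the patient ages, the function names, and the choice of epsilon are hypothetical, and a production system would rely on a vetted differential privacy library rather than hand-rolled floating-point sampling.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy.

    A counting query changes by at most 1 when one record is added or
    removed (sensitivity 1), so the Laplace scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical toy data: ages of ten patients.
ages = [23, 35, 41, 52, 67, 70, 18, 29, 88, 64]
rng = random.Random(0)  # fixed seed only to make the demo repeatable
noisy = private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng)
print(f"true count = 3, noisy release = {noisy:.2f}")
```

A smaller epsilon gives stronger privacy but noisier answers, which is the utility trade-off that 1.2.1.2.3 asks proposals to manage.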

References

[1] OpenAI, “GPT-4 Technical Report”, arXiv preprint arXiv:2303.08774 (2023).

[2] Chowdhery, Aakanksha, Sharan Narang, Jacob Devlin, Maarten Bosma, Gaurav Mishra, Adam Roberts, Paul Barham et al. "PaLM: Scaling language modeling with pathways." arXiv preprint arXiv:2204.02311 (2022).

[3] Formento, Brian, Chuan-sheng Foo, Anh Tuan Luu, and See Kiong Ng. "Using Punctuation as an Adversarial Attack on Deep Learning-Based NLP Systems: An Empirical Study." In Findings of the Association for Computational Linguistics: EACL 2023, pp. 1-34. 2023.

[4] Zhao, Wayne Xin, Kun Zhou, Junyi Li, Tianyi Tang, Xiaolei Wang, Yupeng Hou, Yingqian Min et al. "A survey of large language models." arXiv preprint arXiv:2303.18223 (2023).

[5] Zhao, Shuai, Jinming Wen, Anh Tuan Luu, Junbo Zhao, and Jie Fu. "Prompt as Triggers for Backdoor Attack: Examining the Vulnerability in Language Models." arXiv preprint arXiv:2305.01219 (2023).

[6] Dong, Xinshuai, Anh Tuan Luu, Rongrong Ji, and Hong Liu. "Towards robustness against natural language word substitutions." In Proceedings of International Conference on Learning Representations (ICLR) (2021).

[7] Fowl, Liam, Jonas Geiping, Steven Reich, Yuxin Wen, Wojtek Czaja, Micah Goldblum, and Tom Goldstein. "Decepticons: Corrupted transformers breach privacy in federated learning for language models." arXiv preprint arXiv:2201.12675 (2022).

[8] Dong, Xinshuai, Anh Tuan Luu, Min Lin, Shuicheng Yan, and Hanwang Zhang. "How should pre-trained language models be fine-tuned towards adversarial robustness?" Advances in Neural Information Processing Systems (NeurIPS) (2021).

1.2.2. Secure and Private Data Sharing

In the dynamic landscape of technology, data sharing [1] is a pivotal driver of innovation in AI-driven intelligent systems [2], cloud computing [3], and blockchain [4]. Diverse datasets fuel AI's learning algorithms and predictive power, improving accuracy across sectors such as healthcare and finance. Cloud computing's scalability and collaboration thrive on shared data, fostering cost-effective and accessible solutions. Meanwhile, blockchain's decentralized ledger hinges on data sharing for transparent and secure transactions, impacting supply chains and finance. These intertwined technologies collectively rely on data sharing to break down data silos and unlock groundbreaking advancements. Strategic data sharing therefore remains integral to realizing the full potential of these technologies for the betterment of society.

However, ethical, security, and privacy concerns [5] underline the need for responsible implementation. Security guards against breaches and cyber threats, while privacy safeguards protect personal information and uphold regulatory compliance. Prioritizing fairness prevents biased outcomes and discriminatory practices, which is particularly crucial in intelligent systems. Collectively, these principles foster trust, minimize risks, and sustain ethical practices in data sharing, nurturing a responsible technological landscape.

1.2.2.1. Challenges

1.2.2.1.1. Security of Data Sharing for Intelligent Systems: Few existing approaches can simultaneously guarantee data confidentiality, integrity, and availability (CIA) [6] and thereby provide provably secure data sharing for AI-driven intelligent systems.

1.2.2.1.2. Privacy Concerns in Cloud Computing: Numerous deployed applications tend to abuse user data, undermine privacy, and violate regulations (e.g., GDPR) [7]. Large-scale shared data introduces significant privacy concerns. Existing schemes lack an analyzable private data sharing framework with rich functionality.

1.2.2.1.3. Reliable Fairness Guarantees: Reliable contribution evaluation and incentive mechanisms [8], which are the foundation of project commercialization, have received insufficient attention for large-scale data sharing frameworks such as blockchain and intelligent systems.

1.2.2.1.4. Efficiency Boosting: The co-design of algorithms and hardware acceleration is rarely addressed in existing approaches. High efficiency is paramount for intelligent systems and for novel computing paradigms such as cloud and blockchain.

1.2.2.2. Goals: To address the aforementioned obstacles, the primary objective of the Grand Challenge pertaining to this subject is to explore innovative and efficient resolutions that ensure the security, privacy, and fairness of the existing data sharing framework within significant applications. The proposed solution should encompass the subsequent dimensions.

1.2.2.2.1. Secure Data Sharing for Intelligent Systems: It aims to (1) develop a confidential computing framework for the sharing of large-scale training data, (2) design formal integrity verification mechanisms for diverse training and inference paradigms, and (3) present provable robustness-enhancing methods for various data sharing functions with confidentiality and integrity guarantees.

1.2.2.2.2. Private Data Sharing in Cloud Computing: It aims to (1) investigate provable privacy-preserving algorithms with rich data sharing functionalities, (2) devise fine-grained and dynamic access control mechanisms that can restrict data sharing to selected individuals or groups, and (3) explore private large-scale data sharing as a service, enabling diverse cloud-driven applications.

1.2.2.2.3. Fairness Guarantees for Data Sharing in Blockchain and Intelligent Systems: It aims to (1) design reliable contribution evaluation mechanisms, (2) devise deployable incentive mechanisms for large-scale blockchain and intelligent systems, and (3) integrate provable security and privacy guarantees for fair data sharing.
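The call does not prescribe a particular contribution evaluation method. One widely used candidate is the Shapley value, which credits each participant with its average marginal contribution over all joining orders. The sketch below computes it exactly for a toy data sharing scenario. The participants, their record IDs, and the coverage utility are hypothetical assumptions, and the exhaustive enumeration is only feasible for a handful of participants.

```python
from fractions import Fraction
from itertools import permutations


def coverage(datasets, coalition):
    """Hypothetical utility: number of distinct records a coalition holds."""
    records = set()
    for name in coalition:
        records |= datasets[name]
    return len(records)


def shapley_values(datasets, utility=coverage):
    """Exact Shapley values by averaging marginal gains over all orderings.

    Cost grows factorially with the number of participants, so this is
    only practical for a handful of them.
    """
    players = sorted(datasets)
    totals = {p: Fraction(0) for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = []
        previous = utility(datasets, coalition)
        for p in order:
            coalition.append(p)
            current = utility(datasets, coalition)
            totals[p] += current - previous
            previous = current
    return {p: total / len(orderings) for p, total in totals.items()}


# Hypothetical participants and the record IDs each one contributes.
datasets = {"A": {1, 2, 3, 4}, "B": {3, 4, 5}, "C": {6}}
values = shapley_values(datasets)
for name, value in values.items():
    print(name, value)
```

Here A is credited with 3, B with 2, and C with 1. Records held by both A and B are split evenly between them, and the credits sum to the 6 distinct records in the pooled data. A deployable mechanism at the scale the call envisions would need an approximation, since exact enumeration does not scale.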

References

[1] Kalkman S, Mostert M, Gerlinger C, et al. Responsible data sharing in international health research: a systematic review of principles and norms [J]. BMC medical ethics, 2019, 20: 1-13.

[2] Chen J, Sun J, Wang G. From unmanned systems to autonomous intelligent systems [J]. Engineering, 2022, 12: 16-19.

[3] Sun P J. Security and privacy protection in cloud computing: Discussions and challenges [J]. Journal of Network and Computer Applications, 2020, 160: 102642.

[4] Lu Y, Huang X, Dai Y, et al. Blockchain and federated learning for privacy-preserved data sharing in industrial IoT [J]. IEEE Transactions on Industrial Informatics, 2019, 16(6): 4177-4186.

[5] Makhdoom I, Zhou I, Abolhasan M, et al. PrivySharing: A blockchain-based framework for privacy-preserving and secure data sharing in smart cities [J]. Computers & Security, 2020, 88: 101653.

[6] Dylan D H. CIA and the Pursuit of Security: History, Documents and Contexts [M]. Edinburgh University Press, 2020.

[7] Lu C, Liu B, Zhang Y, et al. From WHOIS to WHOWAS: A Large-Scale Measurement Study of Domain Registration Privacy under the GDPR [C]. NDSS. 2021.

[8] Yin B, Wu Y, Hu T, et al. An efficient collaboration and incentive mechanism for Internet of Vehicles (IoV) with secured information exchange based on blockchains [J]. IEEE Internet of Things Journal, 2019, 7(3): 1582-1593.

1.3. The research team is responsible for defining the proposed research, problem scope, technical approach, and potential impacts of the proposal. Additionally, proposals must explicitly address the following key points:

- Alignment of the proposal with CRPO's objectives and direction.

- Explanation of the novelty of the research and the significant research challenge it aims to address.

- Clarification of potential industry applications or impact.

- Presentation of the translation plan.

- Explanation of the relevance of the research to Singapore.

2.1. CRPO intends to unveil two grand challenges aimed at soliciting innovative approaches to enhance the cybersecurity landscape of both Singapore and the global community. The initiative seeks breakthrough research ideas that can propel advancements in cybersecurity technologies. Each challenge offers funding of up to SGD 6,000,000 for a selected research team, supporting a duration of 2.5 to 3 years for each project.

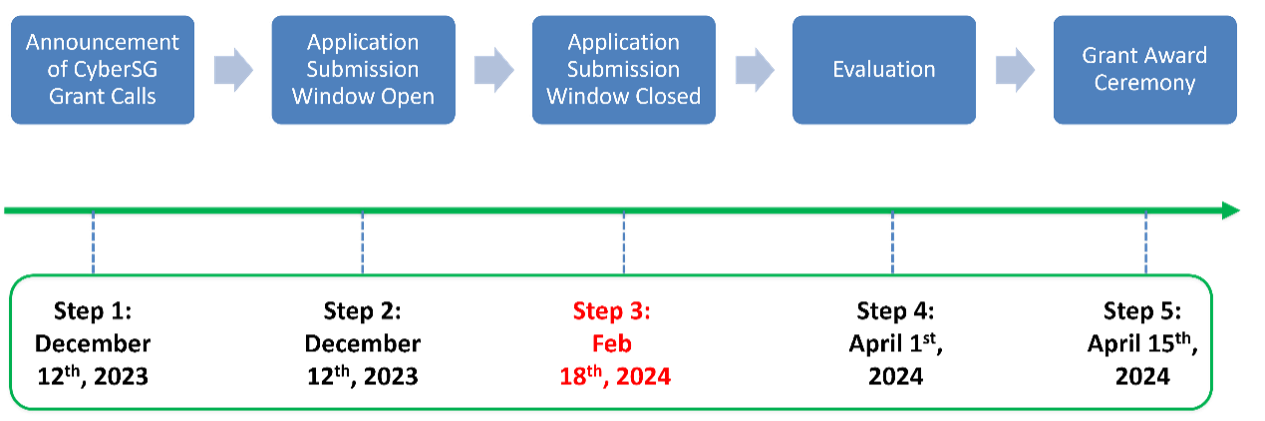

2.2. The first call was released on 12 December 2023. Please refer to section 6 for the planned timeline of the Grand Challenge Grants.

2.3. The proposal shall be based on a realistic budget with appropriate justifications that correspond to the scope of work to be accomplished.

2.4. The corresponding budget requested includes 30% Indirect Research Costs (IRC).

2.5. The total cost of each project includes all approved direct costs4 and indirect research costs/overheads5. All budgeted expenditure should be inclusive of any applicable Goods and Services Tax (GST) at the prevailing rates.

2.6. For all direct cost items proposed for the project, please refer to Annex C – Guidelines for the Management of CRPO Grants, including the list of “Non-Fundable Direct Costs” and note the following:

- Host Institutions must strictly comply with their own procurement practices.

- Host Institutions must ensure that all cost items are reasonable and are incurred under formally established, consistently applied policies and prevailing practices of the host institution.

- All items/services/manpower purchased/engaged must be necessary for the R&D work.

2.7. Research Scholarships are not eligible for support under the CRPO Grant Call.

2.8. Funds awarded cannot be used to support overseas R&D activities. All funding awarded must be used to carry out the research activities in Singapore.
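The arithmetic behind clauses 2.1, 2.4, and 2.5 can be sketched as follows. This is an illustration under two assumptions that the text above does not confirm: that IRC is calculated as 30% of direct costs, and that the SGD 6,000,000 limit applies to the total including IRC. Applicants should check Annex C for the authoritative rules. The function names are hypothetical.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_UP

IRC_RATE = Decimal("0.30")        # clause 2.4; applying it to direct costs is an assumption
FUNDING_CAP = Decimal("6000000")  # clause 2.1, SGD per challenge; assumed to include IRC
CENT = Decimal("0.01")


def total_project_cost(direct_costs: Decimal) -> Decimal:
    """Total cost = direct costs + IRC, assuming IRC = 30% of direct costs."""
    irc = (direct_costs * IRC_RATE).quantize(CENT, rounding=ROUND_HALF_UP)
    return direct_costs + irc


def max_direct_costs(cap: Decimal = FUNDING_CAP) -> Decimal:
    """Largest direct-cost budget whose total stays within the cap."""
    return (cap / (1 + IRC_RATE)).quantize(CENT, rounding=ROUND_DOWN)


print(total_project_cost(Decimal("1000000")))  # 1300000.00
print(max_direct_costs())                      # 4615384.61
```

Under these assumptions, a team planning to the full cap would have roughly SGD 4.6 million available for direct costs, with the remainder covering IRC.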

3.1. The grant call is open to researchers from all Singapore-based Institutes of Higher Learning (IHLs), Research Institutes (RIs) and Industry Partners6.

3.2. At the point of application, the Principal Investigator (PI) must hold a full-time7 appointment in one of the eligible institutions. The PI must be a subject matter expert in the proposed domain, with a strong record of publications in the proposed domain’s conferences and journals.

3.3. The Lead PI must be from an IHL or an RI; Co-PIs may be from Industry Partners.

3.4. If applicable, Co-PIs must hold a full-time appointment in one of the eligible institutions at the point of application. At least one of the Co-PIs must be a subject matter expert in the proposed domain. For Industry Partners, Co-PIs must hold full-time appointments at the company.

3.5. Researchers from Medical Institutions8, start-ups in Singapore, private sector and other entities are eligible to apply as Collaborators.

3.6. Company collaboration(s) with in-kind contributions is encouraged, but not compulsory.

3.7. The team must have the right skills and experience to deliver the project and demonstrate sufficient engagement with stakeholders to scope the proposal.

3.8. Overseas collaborators and/or visiting experts may be invited to Singapore on short-term engagements to assist with specific project tasks. In this arrangement, the costs of airfare, accommodation, and per diem can be budgeted under the other operating expenses of the project.

3.9. Only research conducted in Singapore may be funded under CRPO Grant Call - Grand Challenge. Please refer to Annex B – Terms and Conditions of CRPO Grant.

3.10. Lead PI and Co-PIs should note that parallel submissions are not allowed – i.e., applicants must never send similar versions or part(s) of the current proposal application to other agencies or grants for funding (or vice versa).

Proposals should not be funded, or currently considered for funding, by other agencies. Details of all grants currently held or being applied for by the Lead PI and Co-PIs in related areas of research must be declared in Annex A - CRPO Grant Call Proposal Template.

4.1. Full proposals are to be submitted using the Proposal Template for CRPO Research Call in Annex A and must adequately address the points stated therein.

4.2. Full proposals will be reviewed by the Evaluation Committee, based on the quality of the proposals in the key aspects listed in paragraph 2.4 as well as the following:

- Past research accomplishments of the PI, Co-PI and any collaborators

- Project management plan

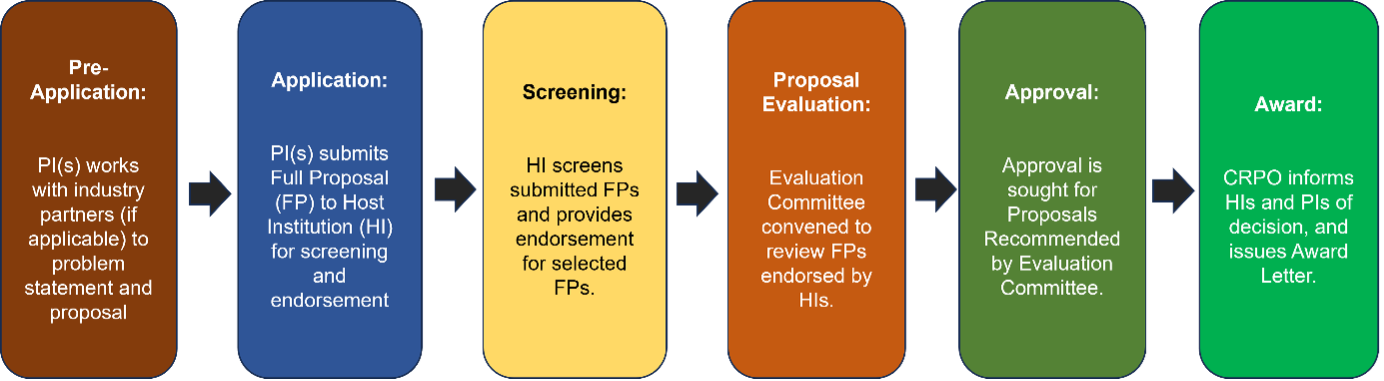

4.3. An illustration of the application, review and selection process is shown below:

4.4. The review will be carried out by the CRPO Evaluation Committee, based on reviews of the proposals solicited from local and overseas experts.

4.5. Reviewers should not be asked to review proposals from their affiliated institutions.

4.6. The review process is expected to take approximately 6 weeks. All decisions are final, and no appeals will be entertained.

4.7. The timeline9 for this CRPO Grant Call is shown below:

4.8. Please note that the respective institutions’ internal deadlines for full proposal submission may differ. However, all proposals selected and endorsed by the Host Institutions must be submitted via email to [email protected] according to the above timeline.

4.9. CRPO reserves the right to reject late or incomplete submissions, and submissions that do not comply with the application instructions.

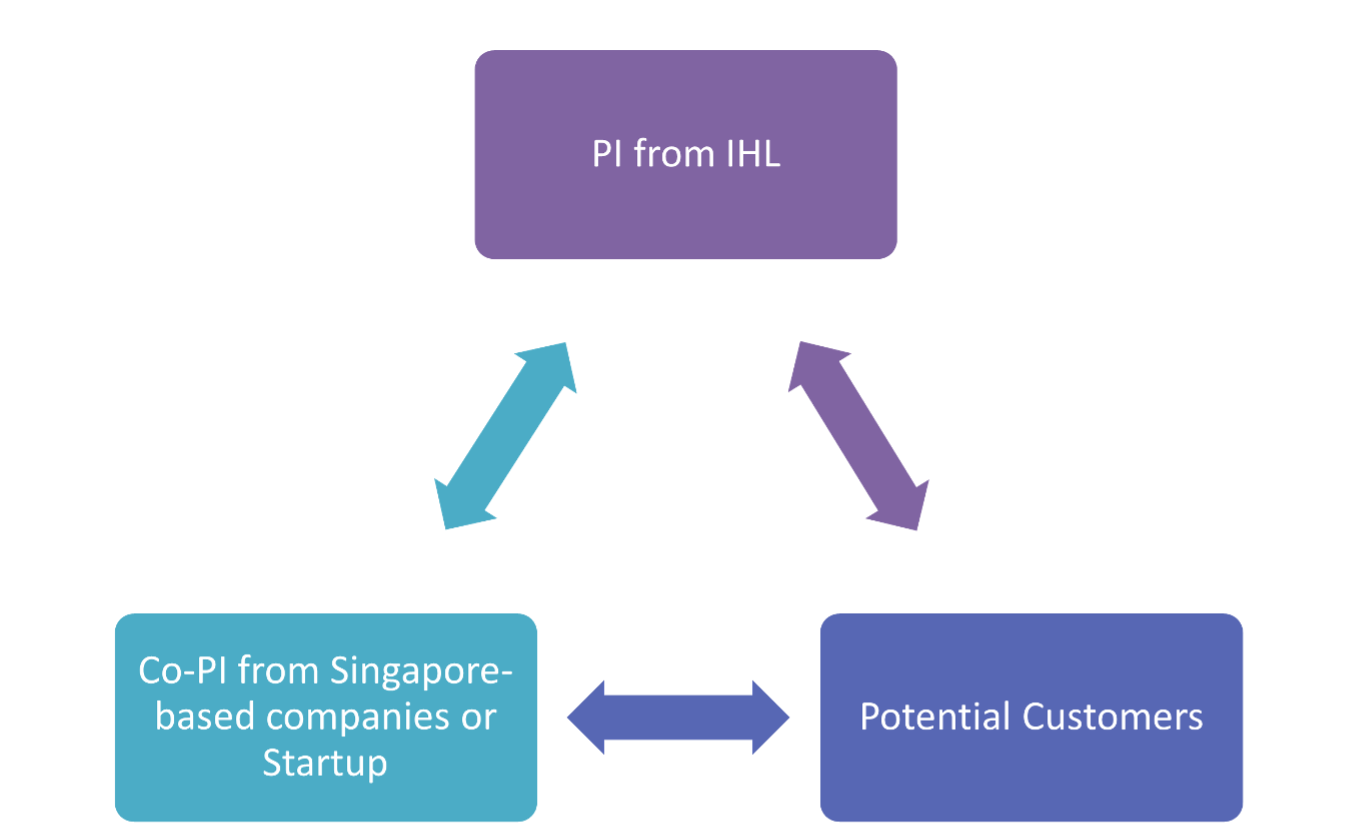

5.1. One Lead PI from a Singapore-based Institute of Higher Learning (IHL) or Research Institute (RI) to conduct fundamental research.

5.2. One industry Co-PI from Singapore-based companies to identify and translate IPs with commercial potential.

5.3. Potential customer collaborators who are willing to adopt the developed security solutions and provide requirement feedback.

5.4. An illustration of the team structure and the collaboration between the teams is shown below:

1 Institutes of Higher Learning (IHLs): National University of Singapore (NUS), Nanyang Technological University (NTU), Singapore Management University (SMU), Singapore University of Technology and Design (SUTD), Singapore Institute of Technology (SIT), Singapore University of Social Sciences (SUSS).

2 Research Institutes (RIs): Such as the Agency for Science, Technology and Research (A*STAR) institutes.

3 Industry Partners: Singapore-based companies and Singapore-based start-ups.

4 Direct costs are defined as the incremental cost required to execute the project. This excludes in-kind contributions, existing equipment and the cost of existing manpower as well as building cost. Supportable direct costs can be classified into expenditure on manpower (EOM), expenditure on equipment (EQP), other operating expenses (OOE) and overseas travel (OT).

5 Indirect costs are expenses incurred by the research activity in the form of space, support personnel, administrative and facilities expenses, depending on the host institution’s prevailing policy. Host institutions will be responsible for administering and managing the support provided by CRPO for the indirect costs of research, if any.

6 National University of Singapore (NUS), Nanyang Technological University (NTU), Singapore Management University (SMU), Singapore University of Technology and Design (SUTD), Singapore Institute of Technology (SIT), Singapore University of Social Sciences (SUSS), A*STAR Research Institutes, Singapore-based companies and Singapore-based start-ups.

7 Defined as at least 9 months of service a year based in Singapore or 75% appointment.

8 Researchers from Medical Institutions in Singapore who hold at least 25% joint appointment in a Singapore-based IHL and/or A*STAR RI may apply as Lead PI or Co-PI. If awarded, the grant will be hosted in the IHL / A*STAR RI.

9 Timeline is subject to change and based on CRPO decisions.

- CRPO Grant Call Rules and Guidelines - Focusing on the Grand Challenge of the CRPO Grant call series (Release date 16 January 2024)

- Annex A - Proposal Template for CRPO Grant Call (Release date 16 January 2024)

- Annex B - Terms and Conditions of CRPO Grant (Release date 16 January 2024)

- Annex C - Guidelines for the Management of CRPO Grant (Release date 16 January 2024)

- FAQs - CRPO Grant Call FAQ (Release date 16 January 2024)

- CRPO Grant Call Launch Presentation (Release date 16 January 2024)