Fast Semantic-Aware Motion State Detection for Visual SLAM in Dynamic Environment

Synopsis

Visual SLAM (vSLAM) enables modern autonomous robotic systems to navigate safely. However, its performance degrades in realistic environments that contain moving objects. This technology increases the robustness of vSLAM in both indoor and outdoor dynamic scenes in a lightweight manner.

Opportunity

Existing visual SLAM (vSLAM) systems struggle in dynamic environments because they cannot effectively ignore moving objects during pose tracking and mapping. This technology improves the robustness of existing feature-based vSLAM by accurately removing the dynamic outliers that cause failures in pose estimation and mapping. A novel motion state detection method identifies regions with a high probability of motion, and its output is then fused with semantic information through a probability framework. This enables accurate and robust moving object extraction while retaining useful features for pose estimation and mapping. To reduce computational complexity, semantic information is extracted only on keyframes with significant image content changes, and semantic propagation compensates for changes in intermediate frames using dense transformation maps and feature flow vectors. These techniques integrate seamlessly into existing vSLAM systems, enhancing their robustness in dynamic environments without significant computational cost.
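The keyframe-only extraction and semantic propagation described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and not the method used by this technology: the function names, the mean-absolute-difference test for "significant image content change", the threshold value, and the nearest-neighbour backward warp are hypothetical stand-ins, and the dense flow field is assumed to be supplied by some other component.

```python
import numpy as np


def needs_semantic_update(key_gray, cur_gray, change_thresh=12.0):
    """Return True when the image changed enough to re-run segmentation.

    Assumption: "significant content change" is approximated by the mean
    absolute intensity difference against the last semantic keyframe.
    """
    diff = np.abs(cur_gray.astype(np.float32) - key_gray.astype(np.float32))
    return float(diff.mean()) > change_thresh


def propagate_mask(mask, flow):
    """Carry a keyframe semantic mask into the current frame.

    Assumption: flow[y, x] = (dx, dy) points from pixel (x, y) of the
    current frame back to its source pixel (x + dx, y + dy) in the keyframe.
    Sampling is nearest-neighbour; out-of-range sources are clamped.
    """
    h, w = mask.shape
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs + flow[..., 0]).astype(int), 0, w - 1)
    src_y = np.clip(np.rint(ys + flow[..., 1]).astype(int), 0, h - 1)
    return mask[src_y, src_x]


if __name__ == "__main__":
    mask = np.zeros((4, 6), dtype=np.uint8)
    mask[1, 2] = 1                      # one "moving object" pixel
    flow = np.zeros((4, 6, 2), dtype=np.float32)
    flow[..., 0] = -1.0                 # object shifted one pixel to the right
    print(propagate_mask(mask, flow))
```

In a real pipeline the expensive segmentation network would run only when `needs_semantic_update` returns True, and `propagate_mask` would fill in the frames between those keyframes.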

Technology

This invention addresses the limitations of current vSLAM systems in dynamic environments by excluding moving objects from pose estimation and mapping. The technology includes a novel motion state detection algorithm that uses depth and feature flow information to identify regions with a high probability of motion. This result is combined with semantic cues via a probability framework to accurately extract moving objects while preserving useful features for pose estimation and mapping. To manage computational complexity, semantic information is extracted only on keyframes with significant image content changes, with semantic propagation applied to intermediate frames using dense transformation maps and feature flow vectors. The proposed techniques enhance the robustness of vSLAM systems in dynamic environments and can be implemented with minimal computational overhead. Extensive experiments on well-known RGB-D and stereo datasets demonstrate that this approach outperforms existing vSLAM methods in various dynamic scenarios, including crowded scenes. Testing on a low-cost embedded platform, the Jetson TX1, highlights the computational efficiency of this method.
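As a rough illustration of how a motion probability and a semantic cue might be combined and then used to discard dynamic features, the sketch below fuses two per-pixel probabilities with a naive-Bayes style rule (independent evidence, uniform prior). This rule is a common textbook choice swapped in for illustration; the source does not specify the actual probability framework. The function names, the threshold, and the `(x, y)` keypoint layout are likewise hypothetical.

```python
import numpy as np


def fuse_moving_probability(p_motion, p_semantic, eps=1e-6):
    """Fuse two per-pixel 'is moving' probabilities.

    Assumption: the two cues are treated as independent evidence with a
    uniform prior, so p = pm*ps / (pm*ps + (1-pm)*(1-ps)).
    """
    p_m = np.clip(np.asarray(p_motion, dtype=np.float64), eps, 1.0 - eps)
    p_s = np.clip(np.asarray(p_semantic, dtype=np.float64), eps, 1.0 - eps)
    num = p_m * p_s
    return num / (num + (1.0 - p_m) * (1.0 - p_s))


def keep_static_features(keypoints, p_fused_map, max_moving_prob=0.5):
    """Keep keypoints (x, y) whose fused moving probability is below the threshold."""
    kp = np.asarray(keypoints, dtype=int)
    probs = p_fused_map[kp[:, 1], kp[:, 0]]   # index the map as [row=y, col=x]
    return kp[probs < max_moving_prob]


if __name__ == "__main__":
    p_motion = np.array([[0.1, 0.9], [0.5, 0.5]])     # e.g. from depth and feature flow
    p_semantic = np.array([[0.2, 0.9], [0.2, 0.8]])   # e.g. prior for the object class
    fused = fuse_moving_probability(p_motion, p_semantic)
    keypoints = [(0, 0), (1, 0), (0, 1), (1, 1)]
    print(keep_static_features(keypoints, fused))
```

With this rule, agreement between the two cues pushes the fused probability toward 0 or 1, while an uninformative cue (0.5) leaves the other cue unchanged, so static features on a potentially movable object are not discarded on semantics alone unless the chosen threshold says so.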

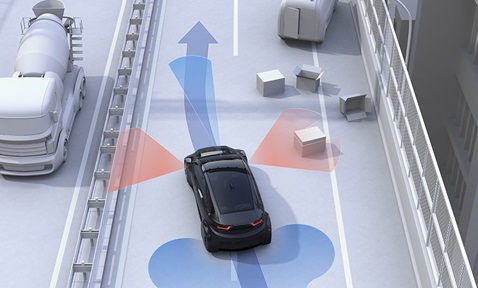

Figure 1: Visual SLAM process flow.

Figure 2: Visual SLAM flowchart.

Applications & Advantages

Main application areas include augmented reality, indoor and outdoor robotics, autonomous vehicles, and unmanned aerial vehicles (UAVs).

Advantages:

- Enables vSLAM to operate seamlessly in highly dynamic environments.

- Keeps computational complexity low, making vSLAM practical on edge devices.

- Accurately removes dynamic outliers, improving pose estimation and mapping.

- Efficiently extracts and propagates semantic information, reducing computational costs.

- Demonstrates superior performance in various dynamic scenarios with high computational efficiency.
