Learning Large Neighborhood Search Policy for Integer Programming

- A deep reinforcement learning method to learn large neighborhood search policies for solving combinatorial optimization problems

Combinatorial optimization problems (COPs) have been widely studied in computer science and operations research, which cover numerous real-world tasks in many fields such as routing and scheduling. Most COPs are very difficult to solve efficiently due to their NP-hardness. On the other hand, machine learning can automatically generate heuristics or policies, which are expected to save massive manual work in algorithm design and raise the performance on COPs.

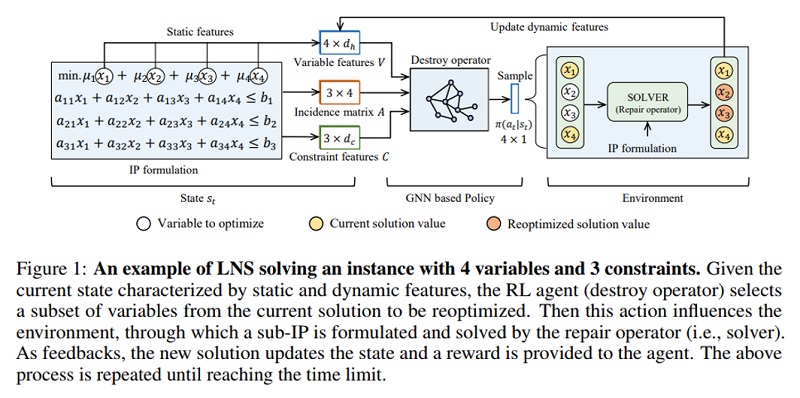

We tackle the following issue: how to improve a solver from externals via reinforcement learning so that it can find high-quality solutions for COPs more quickly.

- we learn large neighborhood search policies to guide the solving process of the solvers without getting access to their inner components

- we train the policy network as the destroy operator in LNS, which decides a subset of variables in the current solution for reoptimization

- the repair operator in LNS is any off-the-shelf solver for COPs

- the proposed method can be used to improve any solver in a high-level, without much intervention in the inner solving process of solvers

Previous learning based methods are generally limited to small instances and require sufficient interface access to the internal solving process of the solvers. Instead, our method can be deployed from externals conveniently.

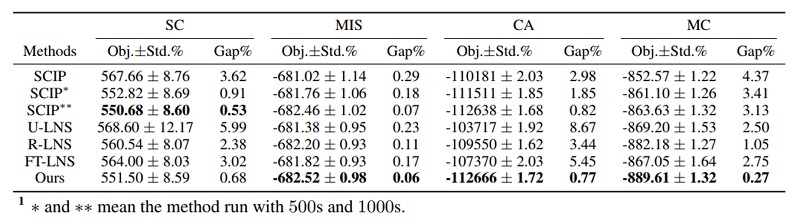

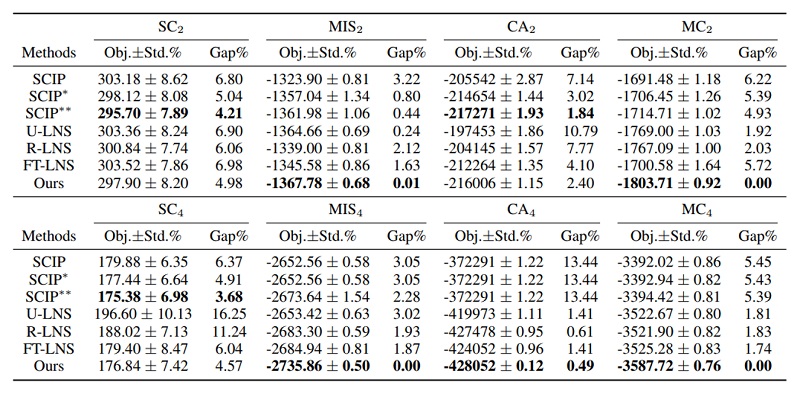

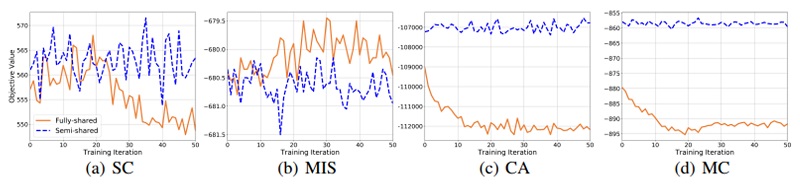

We perform experiments on four typical COPs: Set Covering (SC), Maximal Independent Set (MIS), Combinatorial Auction (CA) and Maximum Cut (MC). We compare with four baselines:

- SCIP with default settings

- U-LNS: a LNS version which uniformly samples a subset size, then fills it by uniformly sampling variables

- R-LNS, a LNS version which randomly groups variables into disjoint equal-sized subsets and reoptimizes them in order

- FT-LNS, a LNS version which applies forward training to mimic the best demonstrations collected from multiple R-LNS runs

Generalization to large instances with thousands of variables

Training curves on different COPs. The fully-shared network is used in our method.

Yaoxin Wu, Wen Song, Zhiguang Cao, and Jie Zhang. “Learning Large Neighborhood Search Policy for Integer Programming.” Advances in Neural Information Processing Systems 34 (2021). (Link)